So check out this code, what’s going on here?

begin transaction insert d1.dbo.T1 values (1); insert d2.dbo.T1 values (1); commit |

The transaction is touching two different databases. So it makes sense that the two actions should be atomic and durable together using the one single transaction.

However, databases implement durability and atomicity using their own transaction log. Each transaction log takes care of its own database. So from another point of view, it makes sense that these are two separate transactions.

Which is it? Two transaction or one transaction?

It’s One Transaction (Mostly)

Microsoft’s docs are pretty clear (Thanks Mladen Prajdic for pointing me to it). Distributed Transactions (Database Engine) says:

A transaction within a single instance of the Database Engine that spans two or more databases is actually a distributed transaction. The instance manages the distributed transaction internally; to the user, it operates as a local transaction.

I can actually see that happening with this demo script:

use master if exists (select * from sys.databases where name = 'D1') begin alter database D1 set single_user with rollback immediate; drop database D1; end go if exists (select * from sys.databases where name = 'D2') begin alter database D2 set single_user with rollback immediate; drop database D2; end go create database d1; go create database d2; go create table d1.dbo.T1 (id int); create table d2.dbo.T1 (id int); go use d1; CHECKPOINT; go begin transaction insert d1.dbo.T1 values (1); insert d2.dbo.T1 values (1); commit select [Transaction ID], [Transaction Name], Operation, Context, [Description] from fn_dblog(null, null); |

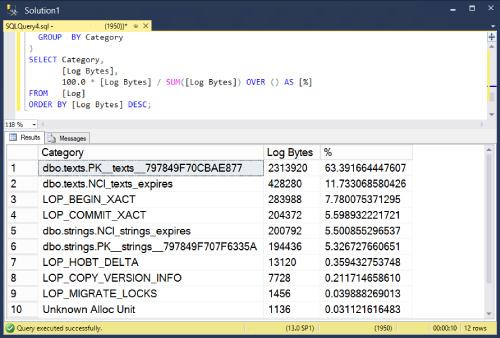

That shows a piece of what’s going on in the transaction log like this:

If you’re familiar with fn_dblog output (or even if you’re not), notice that when a transaction touches two databases, there are extra entries in the transaction log. D1 has LOP_PREP_XACT and LOP_FORGET_XACT and D2 only has LOP_PREP_XACT. Grahaeme Ross wrote a lot more about what this means in his article Understanding Cross-Database Transactions in SQL Server

Well that’s good. I can count on that can’t I?

Except When …

You Break Atomicity On Purpose

Well, they are two databases after all. If you want to restore one database to a point in time before the transaction occurred but not the other, I’m not going to stop you.

Availability Groups

But there’s another wrench to throw in with Availability Groups. Again Microsoft’s docs are pretty clear on this (Thanks Brent for pointing me to them). In Transactions – availability groups and database mirroring they point out this kind of thing is pretty new:

In SQL Server 2016 SP1 and before, cross-database transactions within the same SQL Server instance are not supported for availability groups.

There’s support in newer versions, but the availability group had to have been created with WITH DTC_SUPPORT = PER_DB. There’s no altering the availability group after it’s been created.

It’s also interesting that availability groups’ older brother, database mirroring is absolutely not supported. Microsoft says so several times and wants you to know that if you try and you mess up, it’s on you:

… any issues arising from the improper use of distributed transactions are not supported.

Long story short:

- Cross DB Transactions in the same server are supported with Availability Groups in SQL Server 2017 and later

- Cross DB Transactions are not supported with mirrored databases at all