Five Ways Time Makes Unit Tests Flaky

I explore different sources of test flakiness related to time:

A flaky test is a unit test that sometimes passes and sometimes fails. The causes of these flaky tests are often elusive because they’re not consistently reproducible.

I’ve found that unit tests that deal with dates and times are notorious for being flaky – especially such tests that talk to SQL Server. I want to explore some of the reasons this can happen.

My Setup

All scripts and code samples are available

on github.

In the examples I discuss below, I’m using a table defined like this:

CREATE TABLE dbo.MESSAGE_LOG

(

LogId INT IDENTITY NOT NULL

PRIMARY KEY,

LogMessage NVARCHAR(MAX) NOT NULL,

CreatedDate DATETIME

DEFAULT (SYSDATETIME())

) |

CREATE TABLE dbo.MESSAGE_LOG

(

LogId INT IDENTITY NOT NULL

PRIMARY KEY,

LogMessage NVARCHAR(MAX) NOT NULL,

CreatedDate DATETIME

DEFAULT (SYSDATETIME())

)

I also wrote some methods that execute these queries:

AddLogMessage

INSERT dbo.MESSAGE_LOG(LogMessage)

OUTPUT inserted.LogId

VALUES (@Message); |

INSERT dbo.MESSAGE_LOG(LogMessage)

OUTPUT inserted.LogId

VALUES (@Message);

AddLogMessageWithDate

Same method but this allows the application to supply the LastUpdate value

INSERT dbo.MESSAGE_LOG(LogMessage, LastUpdate)

OUTPUT inserted.LogId

VALUES (@Message, @LastUpdate) |

INSERT dbo.MESSAGE_LOG(LogMessage, LastUpdate)

OUTPUT inserted.LogId

VALUES (@Message, @LastUpdate)

UpdateLogMessage

UPDATE dbo.MESSAGE_LOG

SET Message = @Message,

LastUpdatedTime = SYSDATETIME()

WHERE LogId = @LogId |

UPDATE dbo.MESSAGE_LOG

SET Message = @Message,

LastUpdatedTime = SYSDATETIME()

WHERE LogId = @LogId

Sources of Flaky Tests

In no particular order:

Tests Run Too Quick?

The following test checks to see that UpdateMessage updated the LastUpdate column.

[Test]

public void UpdateMessage_DateIsUpdated_1() {

string message = Guid.NewGuid().ToString();

int logId = m_data.AddLogMessage( message );

LogMessageDto? dto = m_data.GetLogMessage( logId );

DateTime createdDate = dto.LastUpdate;

string newMessage = Guid.NewGuid().ToString();

m_data.UpdateLogMessage( logId, newMessage );

dto = m_data.GetLogMessage( logId );

DateTime updatedDate = dto.LastUpdate;

// The following assertion may fail!

// updatedDate and createdDate are Equal if the server is fast enough

Assert.Greater( updatedDate, createdDate );

} |

[Test]

public void UpdateMessage_DateIsUpdated_1() {

string message = Guid.NewGuid().ToString();

int logId = m_data.AddLogMessage( message );

LogMessageDto? dto = m_data.GetLogMessage( logId );

DateTime createdDate = dto.LastUpdate;

string newMessage = Guid.NewGuid().ToString();

m_data.UpdateLogMessage( logId, newMessage );

dto = m_data.GetLogMessage( logId );

DateTime updatedDate = dto.LastUpdate;

// The following assertion may fail!

// updatedDate and createdDate are Equal if the server is fast enough

Assert.Greater( updatedDate, createdDate );

}

The test ran so quickly that updatedDate has the same value as createdDate. This test may fail with this error message:

Failed UpdateMessage_DateIsUpdated [55 ms]

Error Message:

Expected: greater than 2022-02-05 15:18:10.33

But was: 2022-02-05 15:18:10.33

It’s tempting to to get around this by adding a Thread.Sleep call between the insert and update. I don’t recommend it. That kind of pattern adds up and really lengthens the time it takes to run all tests.

Another solution might involve changing Greater to GreaterOrEqual but then we can’t verify that the value has actually been updated.

Storing dates using a more precise datetime type like DATETIME2 may help avoid more failures, but maybe not all failures.

The Right Way

Ideally we want to set up the test case such that the LastUpdate value is a constant date that’s definitely in the past. I would change this test to use AddLogMessageWithDate instead of AddLogMessage:

DateTime then = new DateTime(2000, 01, 01);

int logId = m_data.AddLogMessageWithDate( message, then ); |

DateTime then = new DateTime(2000, 01, 01);

int logId = m_data.AddLogMessageWithDate( message, then );

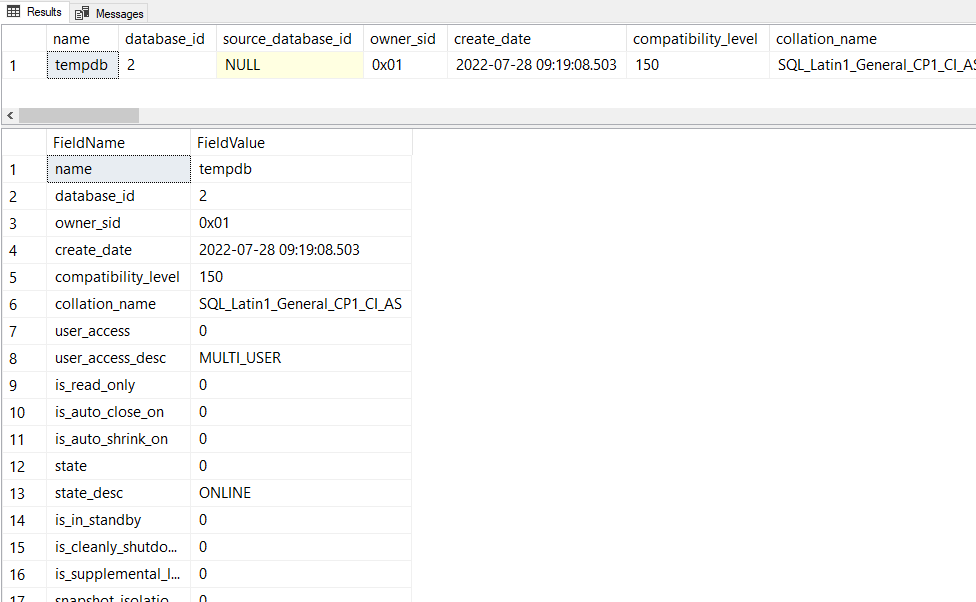

Not All DateTimes Are Created Equal

.Net’s

DateTime is different than SQL Server’s

DATETIME. Specifically they have different precisions. DateTime values in SQL Server are

rounded to increments of .000, .003, or .007 seconds. This means that you can’t store a .Net DateTime value in SQL Server and get it back. This test demonstrates the problem:

[Test]

public void StoreDate_ReadItBack() {

// Store date

string message = Guid.NewGuid().ToString();

DateTime now = DateTime.Now;

int logId = m_data.AddLogMessageWithDate( message, now );

// Read it back

LogMessageDto? dto = m_data.GetLogMessage( logId );

// The following assertion may fail!

// SQL Server's DATETIME has a different precision than .Net's DateTime

Assert.AreEqual( now, dto.LastUpdate );

} |

[Test]

public void StoreDate_ReadItBack() {

// Store date

string message = Guid.NewGuid().ToString();

DateTime now = DateTime.Now;

int logId = m_data.AddLogMessageWithDate( message, now );

// Read it back

LogMessageDto? dto = m_data.GetLogMessage( logId );

// The following assertion may fail!

// SQL Server's DATETIME has a different precision than .Net's DateTime

Assert.AreEqual( now, dto.LastUpdate );

}

It may fail with:

Failed StoreDate_ReadItBack [101 ms]

Error Message:

Expected: 2022-02-04 15:11:20.4474577

But was: 2022-02-04 15:11:20.447

The Right Way

Understanding the resolution limitations of SQL Server’s DateTime is important here. A few solutions come to mind:

- Maybe use a constant value instead of “now”

- Modify the database columns to use SQL Server’s

DATETIME2 which has a better resolution

- Just fetch “now” from the database. I like this idea. When I use it again later, I’ll go into more detail.

Time Zones (Of Course)

Running integration tests that talk to a database on a separate servercan mean translating server times back and forth between both servers. This leads to another common source of flakiness: time zones. It’s not easy to avoid this kind of issue. Both Azure and AWS try to tackle this by use UTC everywhere.

A flaky test might look like this.

public void UpdateMessage_DateIsUpdated_2() {

string message = Guid.NewGuid().ToString();

DateTime now = DateTime.Now;

int logId = m_data.AddLogMessageWithDate( message, now );

string newMessage = Guid.NewGuid().ToString();

m_data.UpdateLogMessage( logId, newMessage );

LogMessageDto? dto = m_data.GetLogMessage( logId );

// This next assertion can fail if the database is in a different time zone

Assert.GreaterOrEqual( dto.LastUpdate, now );

} |

public void UpdateMessage_DateIsUpdated_2() {

string message = Guid.NewGuid().ToString();

DateTime now = DateTime.Now;

int logId = m_data.AddLogMessageWithDate( message, now );

string newMessage = Guid.NewGuid().ToString();

m_data.UpdateLogMessage( logId, newMessage );

LogMessageDto? dto = m_data.GetLogMessage( logId );

// This next assertion can fail if the database is in a different time zone

Assert.GreaterOrEqual( dto.LastUpdate, now );

}

It fails like this:

Failed UpdateMessage_DateIsUpdated_2 [19 ms]

Error Message:

Expected: greater than or equal to 2022-02-05 21:06:54.521464

But was: 2022-02-05 16:06:54.52

Why is this pattern a source of flaky tests? The success of the test depends on the time zones of the test server and the database server. But even if you control both time zones, this particular example is still vulnerable to clock drift as we’ll see later.

The Right Way

Use a constant time or try fetching “now” from the database.

DateTime now = m_nowProvider.Now(); |

DateTime now = m_nowProvider.Now();

Here I’m using a method I wrote which simply returns the value of SELECT GETDATE(); from the database.

Clock Drift

Related to time zones is clock drift which again causes errors when you compare dates from two different servers.

No server’s clock is perfect and I like to think of each server’s clock as having its own time zone. Windows tells me that my laptop is set at (UTC -05:00) but with clock drift it’s probably something like (UTC -05:00:01.3). You can work at synchronizing clocks, but unless you’re testing that synchronization, you shouldn’t depend on it in your tests.

Just like in the case with time zones, this test may fail when it compares times from two different clocks:

public void UpdateMessage_DateIsUpdated_3() {

string message = Guid.NewGuid().ToString();

DateTime now = DateTime.Now;

int logId = m_data.AddLogMessageWithDate( message, now );

string newMessage = Guid.NewGuid().ToString();

m_data.UpdateLogMessage( logId, newMessage );

LogMessageDto? dto = m_data.GetLogMessage( logId );

// This next test can fail if the clocks on the database server is off by a few seconds

Assert.GreaterOrEqual( dto.LastUpdate, now );

} |

public void UpdateMessage_DateIsUpdated_3() {

string message = Guid.NewGuid().ToString();

DateTime now = DateTime.Now;

int logId = m_data.AddLogMessageWithDate( message, now );

string newMessage = Guid.NewGuid().ToString();

m_data.UpdateLogMessage( logId, newMessage );

LogMessageDto? dto = m_data.GetLogMessage( logId );

// This next test can fail if the clocks on the database server is off by a few seconds

Assert.GreaterOrEqual( dto.LastUpdate, now );

}

The Right Way

Just like before, use a constant value or try fetching “now” from the database.

DateTime now = m_nowProvider.Now(); |

DateTime now = m_nowProvider.Now();

This way we’re comparing times from only one server’s clock.

Daylight Savings (Of Course)

This next test is flaky because of daylight savings time. It’s not specific to SQL Server but I thought I’d include it because I have been burned by this before:

[Test]

public void StoreDateInTheFuture() {

string message = Guid.NewGuid().ToString();

DateTime inAMonth = DateTime.Now + TimeSpan.FromDays( 30 );

// CovertTime may fail because "a month from now" may be an invalid DateTime (with daylight savings)

inAMonth = TimeZoneInfo.ConvertTime( inAMonth, TimeZoneInfo.Local );

m_data.AddLogMessageWithDate( message, inAMonth );

Assert.Pass();

} |

[Test]

public void StoreDateInTheFuture() {

string message = Guid.NewGuid().ToString();

DateTime inAMonth = DateTime.Now + TimeSpan.FromDays( 30 );

// CovertTime may fail because "a month from now" may be an invalid DateTime (with daylight savings)

inAMonth = TimeZoneInfo.ConvertTime( inAMonth, TimeZoneInfo.Local );

m_data.AddLogMessageWithDate( message, inAMonth );

Assert.Pass();

}

I saw a test just like this one fail at 2:18 AM on February 9th, 2018. Adding 30 days to that date brought us to 2:18AM which was right in the middle of the hour we were skipping for daylight savings time and that’s what caused the error. This test fails with:

Failed StoreDateInTheFuture [32 ms]

Error Message:

System.ArgumentException : The supplied DateTime represents an invalid time. For example, when the clock is adjusted forward, any time in the period that is skipped is invalid. (Parameter ‘dateTime’)

Summary

Flaky tests come from non-deterministic tests. To

quote Martin Fowler, “Few things are more non-deterministic than a call to the system clock”. Try to:

- Write tests with hard coded dates

- Avoid comparing dates sourced from two different clocks

- Consider writing a “NowProvider” (which can be mocked!)

- Be very deliberate about time zones

- Be very deliberate about data types (both in C# and SQL Server)