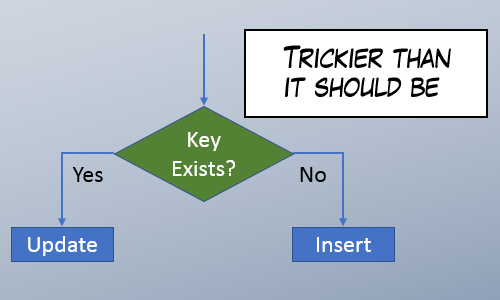

Good ways and bad ways to update or insert rows

- The Setup

- AntiPatterns

- Vanilla Solution

- MERGE Statement

- Inside a Transaction

- Inside a Serializable Transaction

- Using IGNORE_DUP_KEY

- Patterns

- Inside a Transaction With Lock Hints (Update More Common)

- Inside a Transaction With Lock Hints (Insert More Common)

- MERGE Statement With Serializable

- Just Do It

- Performance and Other Concerns

In 2015, Postgres added support for ON CONFLICT DO UPDATE to their INSERT statements. Their docs say that using this syntax guarantees an atomic INSERT or UPDATE outcome; one of those two outcomes is guaranteed, even under high concurrency.

Now, that is one amazing feature isn’t it? Postgres worked really hard to include that feature and kudos to them. I would trade ten features like stretch db for one feature like this. It makes a very common use case simple. And it takes away the awkwardness from the developer.

But if you work with SQL Server, the awkwardness remains and you have to take care of doing UPSERT correctly under high concurrency.

I wrote a post in 2011 called Mythbusting: Concurrent Update/Insert Solutions. But since then, I learned new things, and people have suggested new UPSERT methods. I wanted to bring all those ideas together on one page. Something definitive that I can link to in the future.

The Setup

I’m going consider multiple definitions of a procedure called s_AccountDetails_Upsert. The procedure will attempt to perform an UPDATE or INSERT to this table:

CREATE TABLE dbo.AccountDetails ( Email NVARCHAR(400) NOT NULL CONSTRAINT PK_AccountDetails PRIMARY KEY, Created DATETIME NOT NULL DEFAULT GETUTCDATE(), Etc NVARCHAR(MAX) ); |

Then I’m going to test each definition by using a tool like SQL Query Stress to run this code

declare @rand float = rand() + DATEPART( second, getdate() ); declare @Email nvarchar(330) = cast( cast( @rand * 100 as int) as nvarchar(100) ) + '@somewhere.com'; declare @Etc nvarchar(max) = cast( newid() as nvarchar(max) ); exec dbo.s_AccountDetails_Upsert @Email, @Etc; |

Antipattern: Vanilla Solution

If you don’t care about concurrency, this is a simple implementation. Nothing fancy here:

CREATE PROCEDURE s_AccountDetails_Upsert ( @Email nvarchar(4000), @Etc nvarchar(max) ) AS IF EXISTS ( SELECT * FROM dbo.AccountDetails WHERE Email = @Email ) UPDATE dbo.AccountDetails SET Etc = @Etc WHERE Email = @Email; ELSE INSERT dbo.AccountDetails ( Email, Etc ) VALUES ( @Email, @Etc ); |

Unfortunately, under high concurrency, this procedure fails with primary key violations.

Antipattern: MERGE Statement

The MERGE statement still suffers from the same concurrency issues as the vanilla solution.

CREATE PROCEDURE s_AccountDetails_Upsert ( @Email nvarchar(4000), @Etc nvarchar(max) ) AS MERGE dbo.AccountDetails AS myTarget USING (SELECT @Email Email, @Etc etc) AS mySource ON mySource.Email = myTarget.Email WHEN MATCHED THEN UPDATE SET etc = mySource.etc WHEN NOT MATCHED THEN INSERT (Email, Etc) VALUES (@Email, @Etc); |

Primary Key violations are still generated under high concurrency. Even though it’s a single statement, it’s not isolated enough.

Antipattern: Inside a Transaction

If you try putting the vanilla solution inside a transaction, it makes the whole thing atomic, but still not isolated. You still get primary key violations:

CREATE PROCEDURE s_AccountDetails_Upsert ( @Email nvarchar(4000), @Etc nvarchar(max) ) AS BEGIN TRAN IF EXISTS ( SELECT * FROM dbo.AccountDetails WHERE Email = @Email ) UPDATE dbo.AccountDetails SET Etc = @Etc WHERE Email = @Email; ELSE INSERT dbo.AccountDetails ( Email, Etc ) VALUES ( @Email, @Etc ); COMMIT |

Antipattern: Inside a Serializable Transaction

So this should be isolated enough, but now it’s vulnerable to deadlocks:

CREATE PROCEDURE s_AccountDetails_Upsert ( @Email nvarchar(4000), @Etc nvarchar(max) ) AS SET TRANSACTION ISOLATION LEVEL SERIALIZABLE BEGIN TRAN IF EXISTS ( SELECT * FROM dbo.AccountDetails WHERE Email = @Email ) UPDATE dbo.AccountDetails SET Etc = @Etc WHERE Email = @Email; ELSE INSERT dbo.AccountDetails ( Email, Etc ) VALUES ( @Email, @Etc ); COMMIT |

Antipattern: Using IGNORE_DUP_KEY

I want to mention one more bad solution before I move on to the good solutions. It works and it’s interesting, but it’s a bad idea.

ALTER TABLE dbo.AccountDetails DROP CONSTRAINT PK_AccountDetails; ALTER TABLE dbo.AccountDetails ADD CONSTRAINT PK_AccountDetails PRIMARY KEY ( Email ) WITH ( IGNORE_DUP_KEY = ON ) GO CREATE PROCEDURE dbo.s_AccountDetails_Upsert ( @Email nvarchar(4000), @Etc nvarchar(max) ) AS -- This statement will not insert if there's already a duplicate row, -- But it won't raise an error either INSERT dbo.AccountDetails ( Email, Etc ) VALUES ( @Email, @Etc ); UPDATE dbo.AccountDetails SET @Etc = Etc WHERE @Email = Email; |

So what are some better solutions?

Pattern: Inside a Transaction With Lock Hints (Update More Common)

My prefered solution.

CREATE PROCEDURE s_AccountDetails_Upsert ( @Email nvarchar(4000), @Etc nvarchar(max) ) AS SET XACT_ABORT ON; BEGIN TRAN UPDATE TOP (1) dbo.AccountDetails WITH (UPDLOCK, SERIALIZABLE) SET Etc = @Etc WHERE Email = @Email; IF (@@ROWCOUNT = 0) BEGIN INSERT dbo.AccountDetails ( Email, Etc ) VALUES ( @Email, @Etc ); END COMMIT |

Using some tips from Aaron Bertrand in Please stop using this UPSERT anti-pattern.

This is a solid solution, but every implementation is different so every time you use this pattern, test for concurrency.

Pattern: Inside a Transaction With Lock Hints (Insert More Common)

CREATE PROCEDURE s_AccountDetails_Upsert ( @Email nvarchar(4000), @Etc nvarchar(max) ) AS SET XACT_ABORT ON; BEGIN TRAN INSERT dbo.AccountDetails ( Email, Etc ) SELECT @Email, @Etc WHERE NOT EXISTS ( SELECT * FROM dbo.AccountDetails WITH (UPDLOCK, SERIALIZABLE) WHERE Email = @Email ) IF (@@ROWCOUNT = 0) BEGIN UPDATE TOP (1) dbo.AccountDetails SET Etc = @Etc WHERE Email = @Email; END COMMIT |

Pattern: MERGE Statement With Serializable Isolation

Nice, but be careful with MERGE statements.

CREATE PROCEDURE s_AccountDetails_Upsert ( @Email nvarchar(4000), @Etc nvarchar(max) ) AS SET TRANSACTION ISOLATION LEVEL SERIALIZABLE; MERGE dbo.AccountDetails AS myTarget USING (SELECT @Email Email, @Etc etc) AS mySource ON mySource.Email = myTarget.Email WHEN MATCHED THEN UPDATE SET etc = mySource.etc WHEN NOT MATCHED THEN INSERT (Email, Etc) VALUES (@Email, @Etc); |

For alternative syntax, skip the SET TRANSACTION ISOLATION LEVEL SERIALIZABLE and include a lock hint: MERGE dbo.AccountDetails WITH (HOLDLOCK) AS myTarget.

Pattern: Just Do It

Just try it and catch and swallow any exception

CREATE PROCEDURE s_AccountDetails_Upsert ( @Email nvarchar(4000), @Etc nvarchar(max) ) AS BEGIN TRY INSERT INTO dbo.AccountDetails (Email, Etc) VALUES (@Email, @Etc); END TRY BEGIN CATCH -- ignore duplicate key errors, throw the rest. IF ERROR_NUMBER() IN (2601, 2627) UPDATE dbo.AccountDetails SET Etc = @Etc WHERE Email = @Email; END CATCH |

I wrote a post about it in Ugly Pragmatism For The Win.

And I wrote about a rare problem with it in Case study: Troubleshooting Doomed Transactions.

I have no concurrency concerns here but because of this issue – not to mention performance concerns – it’s no longer my first preference.

Performance and Other Concerns

When describing each pattern so far, I haven’t paid attention to performance, just concurrency. There are many opportunities to improve the performance of these solutions. In particular, pay very close attention to your average use case. If your workload UPDATEs a row 99% of the time and INSERTs a row only 1% of the time, then optimal implementation will look different than if the UPDATE and INSERT frequency is reversed.

Check out these links (thanks Aaron Bertrand)

Nice work as always, sir. Look at you with the table of contents at the top right! Nifty! Try viewing the post in incognito mode in your browser though – there’s two checkboxes for “Notify me of followup comments via email”.

Comment by Brent Ozar — July 20, 2017 @ 2:58 pm

(fixed)

What? You don’t want double the opportunity to be notified that someone on the internet said something!

Michael

Comment by Michael J. Swart — July 20, 2017 @ 3:00 pm

Hi,

I have tried to reproduce the issues, but cannot to do achieve that. Have tried only the vanilla solution.

Could you please share the .sqlstress file?

Which version and edition of SQl Server do you use?

Thanks.

Martin

Comment by Martin — July 26, 2017 @ 5:42 am

Hi Martin,

I think there are a few things I could suggest

tempdb.dbo.s_DoSomething. That’s described under The C# heading on this post: Generating Concurrent Activity. But I recognize that not everyone has a C# compiler on hand.Good luck Martin, let me know what works for you, I’ll update the post.

Comment by Michael J. Swart — July 27, 2017 @ 9:28 am

Great stuff! All SQL devs should read this.

Comment by Alex Friedman — September 12, 2017 @ 11:20 am

I tend to use MERGE with UPDLOCK, HOLDLOCK, ROWLOCK. (And HOLDLOCK = SERIALIZABLE). That packages everything that’s needed up into one statement.

Another credible pattern would be using SERIALIZABLE, any other technique, and retry in case of deadlock.

Comment by tobi — September 12, 2017 @ 1:58 pm

Thanks Tobi,

The retry by the application in case of deadlock is definitely a good pattern. I wish included it.

I think I’m changing my point of view on it too. I used to think that retrying a failure was a last-resort solution and amounted to admitting defeat.

But now I think that retries are a necessary part of the transaction landscape and they becomes almost necessary when considering more distributed solutions, or other high-availability solutions in general.

Comment by Michael J. Swart — September 12, 2017 @ 2:28 pm

Hi Michael,

Have you considered this pattern, it does slightly favour UPDATE over INSERT, but performs well for both?

ALTER PROCEDURE s_AccountDetails_Upsert ( @Email nvarchar(4000), @Etc nvarchar(max) )

AS

SET TRANSACTION ISOLATION LEVEL SERIALIZABLE;

BEGIN TRAN

UPDATE dbo.AccountDetails

SET Etc = @Etc

WHERE Email = @Email

INSERT dbo.AccountDetails ( Email, Etc )

SELECT @Email, @Etc

WHERE @@ROWCOUNT=0

COMMIT

Comment by Mister Magoo — October 5, 2017 @ 8:28 pm

Nice! That does indeed work with no deadlocks.

I like this especially because it avoids hints and the merge statement.

Comment by Michael J. Swart — October 6, 2017 @ 9:27 am

The “Just do it” solution made me think “Thank God I don’t work with this guy”.

Comment by Leo — March 7, 2018 @ 11:42 am

Yeah, the feeling’s mutual.

Comment by Michael J. Swart — March 7, 2018 @ 2:23 pm

Serializable isolation is sort of an anti-pattern, since it hampers concurrent writes to the table being upserted to. Sql server is going to take out a range lock on the key field.

For the sake of concurrency, a better pattern is to use sp_getapplock, where you can lock on your key(s). sp_getapplock is extremely performant, and since you can lock on a single key, concurrency doesn’t take a hit. Here’s the gist of the pattern:

Comment by Toofy Snaddlepuff — October 12, 2018 @ 4:43 pm

Hey there Toofy (great name)

Your experience with sp_getapplock and mine are really really different. In fact, my first reaction to your post was surprise because I’ve always seen sp_getapplock as an enemy of concurrency.

My dumb blog software stripped out the value that you used for @lockName. But I’m assuming it’s email or something. I have to admit that I’ve never thought about using sp_getapplock that way.

Correct me if I’m wrong, but the idea is that two inserts that have different keys, but adjacent key values, would block eachother because of the range lock (with the same ranges).

And your method avoids that by locking the value of the key.

That’s really an cool idea. I’m going to test it out soon.

Comment by Michael J. Swart — October 12, 2018 @ 5:16 pm

OK. I was actually turned onto sp_getapplock by the former chief architect of Ancestry.com. The amount of concurrency that they support was / is ridiculous, and he said he used it all over the place. In fact, concurrency / load was so high, that the Sql Server tiger team that MS sent them told them to yank all foreign keys as rule #1 on a db of that size and load (since fkeys cause concurrency issues when you’re at that scale).

You do need to be careful not to leave a lock hanging open. That’s why I prefer the transaction based approach, where a rollback or commit closes the lock. There can also arise deadlock issues as with any other pessimistic locking strategy.

Granularity of the lock is one benefit, but there are others. For one, you can make the “critical section” as large or small as you like, ie, you can release the lock before the entire batch finishes. Secondly, you’re not locking rows / tables with serializable isolation, which has an impact on other transactions in addition to the current transaction. Remember that serializable isolation includes locking used for repeatable read, which affects reads.

Comment by Toofy Snaddlepuff — October 15, 2018 @ 4:57 pm

You say “under high concurrency” it will fail. But we are talking about probabilities here right? I mean, it will fail under any concurrency load, even if low, just rarer.

Comment by JFO — October 26, 2018 @ 7:10 am

Yes, you’re right that’s a subtle distinction. I measured it once (for blocking not deadlocks) and there does seem to be a threshold where concurrency troubles increase rapidly: https://michaeljswart.com/2016/02/future-proofing-for-concurrency/

Comment by Michael J. Swart — October 26, 2018 @ 7:42 am

Hello!

Your “Just Do It” pattern has a disadvantage that you neglect to mention. If used with IDENTITY columns, it burns an identity value on the failed INSERT. This might not seem like it’s too bad — they’re just integers, and we have plenty of those, right? But the thing is the database is doing work to transactionally create and manage those values. In a high traffic insert pattern, eventually running out of numbers is a real possibility, too.

Maybe it’s beyond the scope of your blog post, but I think it’s important to differentiate between a “soft INSERT” and a true UPSERT. A soft insert means that we have an entity, but we don’t know if it’s in the database just yet. Maybe it is already, or maybe it’s up to us at this time to create that entity so it is recorded for ourselves and everyone else, too. The soft insert differs from a regular insert in that there’s this race to create. The soft insert differs from an UPSERT in that we don’t have data to update if the entity already exists. We contextually know that the first one wins, and we don’t have anything new to add so it’s not up to us — at this time, at least — to update anything if the record already exists.

I think “soft insert” is an important distinction because it should be handled in a very different way than an UPSERT, but it’s not uncommon for people to gravitate toward an UPSERT or MERGE pattern when they really want the subtly different semantics of a soft INSERT.

But back to upserts: I’m surprised you haven’t examined HOLDLOCK or UPDLOCK hints. Is this just an oversight, or do you have a reason to purposefully exclude them?

Comment by Mike Blaszczak — December 28, 2018 @ 11:06 am

Not an oversight. Search for UPDLOCK in this post.

Comment by Michael J. Swart — December 28, 2018 @ 11:16 am

[…] does best. All we need to do is to create a SQL server database with temporal tables, create upsert (a combined insert and update statement) and delete Stored Procedures, and then hook into the […]

Pingback by Sync Sitecore content to external database | Brian Pedersen's Sitecore and .NET Blog — March 6, 2020 @ 4:51 am

These are all still antipatterns, because.

A) You need to make (temporary) stored procedurues

B) Code is 20 times longer than lets say MySql “REPLACE INTO”

C) If you need huge code blocks, you are making antipatterns. The only way to force patterns is to stop using Sql Server until MS adopts “replace into”. We have this power.

Comment by Boris — September 8, 2020 @ 9:09 am

Hey Boris,

A) There’s no need for stored procedures here (temporary or otherwise).

B) I really like postgres’s “ON CONFLICT UPDATE” syntax.

C) Although I also prefer simple code blocks, I would still pick SQL Server over MySql. A syntax quirk is nowhere near close enough to make me change my mind.

Comment by Michael J. Swart — September 8, 2020 @ 9:31 am

did anyone consider using:

CREATE PROCEDURE s_AccountDetails_Upsert ( @Email nvarchar(4000), @Etc nvarchar(max) )

AS

BEGIN

UPDATE dbo.AccountDetails

SET Etc = @Etc

WHERE Email = @Email;

IF @@ROWCOUNT = 0

INSERT dbo.AccountDetails ( Email, Etc )

VALUES ( @Email, @Etc );

END;

GO

Comment by Vladimir Sotirov — September 14, 2020 @ 7:34 pm

Hi Vladimir,

Unfortunately that suffers from the same primary key violations as the vanilla anti pattern.

Comment by Michael J. Swart — September 14, 2020 @ 7:40 pm

Preface: I’m “The DB guy” in my group by simple merit of least ignorance.

Do these options transfer well to a “Get Or Add” style of access? I have an application that will most often run a SELECT statement, but in highly concurrent bursts there will be a scenario where the value does not exist and should be added, while not violating Unique Constraints on the column.

Comment by James — January 20, 2021 @ 2:20 am

Playing with Mister Magoo’s submission above, I feel like this would do the trick, but is it the better way?

[tsql]

CREATE PROCEDURE [dbo].[InsertIfNotExist](@Email varchar(450), @Identity bigint output)

AS

SET TRANSACTION ISOLATION LEVEL SERIALIZABLE;

BEGIN TRAN

select @Identity = EmailId from [UserEmail] where Email = @Email

insert [UserEmail](Email) SELECT @Email WHERE @@ROWCOUNT = 0 SET @Identity = SCOPE_IDENTITY()

COMMIT

[/tsql]

Comment by James — January 20, 2021 @ 2:37 am

@James,

It looks like it might do the trick. I don’t know if it’s more ore less elegant than the other solutions.

As always the only way to know for sure whether it works is to test it.

We also have several Fetch or Create procedures and we use a pattern similar to yours

Comment by Michael J. Swart — January 20, 2021 @ 9:29 am

Hey Michael, thanks for this (and other) blog posts, I’m an avid reader.

I have a question regarding “Inside a Serializable Transaction With Lock Hints” pattern.

I’ve seen this pattern recommended by many experts, and it definitely works, however the reasoning behind it is usually left as an exercise for the reader. What I mean is that:

1) Updlock by itself is held until the end of transaction. So that’s the reason why a transaction is needed. However, it doesn’t have to be a serializable-level transaction, read committed will do just as fine.

2) So the only reason to have a Serializable level transaction – or, equivalently, a holdlock hint – that I see is as a protection (due to range locks) against concurrent inserts by some other thread that DOESN’T USE UPDLOCK (otherwise it would block). It may be a reasonable thing to do, but it seems to be another problem than the one being solved in your blog post. And the best solution probably would be not to use serializable, but rather go and change that other ill-behaved logic to also use updlock if it’s possible.

Am I missing something or is my analysis correct?

Thanks a lot in advance for you thoughts!

Alex

Comment by Alex Sh — January 29, 2021 @ 10:01 am

Hey Alex,

If I were to create a procedure like above that uses (UPDLOCK) inside a transaction (not serializable), I still get primary key violations.

Even though the only thing that’s running are different calls to

s_AccountDetails_UpsertThe reason is that U locks are not adequate is because we want to lock rows that aren’t there. Only range locks can do that.

Imagine the table is empty and two instances come along at the same time using read committed and U locks.

One will see no rows and take no locks. The other has nothing to block on and also sees no rows. Then both will try to insert and one will fail with an attempted PK violation.

So your guess of “otherwise it would block” is the part that I believe you’re missing.

This isn’t something I knew or remembered off the top of my head though. I recreated the objects, added a new version of s_AccountDetails_Upsert based on your suggestion (UPDLOCK inside a read committed transaction) and reran the experiment. I got lots of PK Violations.

Comment by Michael J. Swart — January 29, 2021 @ 11:46 am

Hey Michael!

Indeed, you’re right, thanks a bunch for taking time to figure it out.

One last question: are you using your C#-based concurrency generator, or SQL Query stress? I can’t seem to achieve any PK violations with SQL Query stress, using your suggestion in the comments. It seems C# is the way to go for these type of tests.

Thanks, Alex

Comment by Alex Sh — January 29, 2021 @ 4:44 pm

I was using my C# generator, but I just gave it a go with Sql Query stress and saw the same thing as I saw with my C# app.

I can’t guess what the differences could be between my setup and yours.

Comment by Michael J. Swart — January 29, 2021 @ 5:08 pm

Hi Michael,

You mention that using ‘IGNORE_DUP_KEY’ is an anti-pattern, but don’t explain why. I’m interested in knowing more about that, because we currently use this to perform soft inserts in our telemetry table. We send through a batch of between 1,000 to 10,000 rows at a time, using SqlBulkCopy. We found it makes an incredible difference in speed using SqlBulkCopy + IGNORE_DUP_KEY, vs using SqlCommand to call a stored procedure for each row. We also tried serializing the readings to a json array, so we could call the stored procedure once instead of per row, and that was better but still not as fast as SqlBulkCopy. What would be an alternative way of doing this?

Comment by Andrew Williamson — March 21, 2021 @ 6:51 pm

I don’t like IGNORE_DUP_KEY mostly for academic reasons.

I like to look at a query and by inspection be able to tell you what’s going to happen to the data.

So just like triggers, or ON DELETE CASCADE foreign keys. There’s stuff that goes on behind the scenes.

From my own point of view when I’m troubleshooting queries they’re often queries that I didn’t write. And so it’s a personal preference.

Of course I’m not going to frown at a solution that does it’s job well, so I’m sure it works fine for you. If we worked together, my only request would be that you put a comment in with the query that mentions you’re relying on IGNORE_DUP_KEY (the query, not just the table definition)

Paul White also has some thoughts on the feature.

https://sqlperformance.com/2019/04/sql-performance/ignore_dup_key-slower-clustered-indexes

Comment by Michael J. Swart — March 21, 2021 @ 7:02 pm

Hi, what do you think about this solution:

CREATE PROCEDURE s_AccountDetails_Upsert ( @Email nvarchar(4000), @Etc nvarchar(max) )

AS

IF NOT EXISTS ( SELECT * FROM dbo.AccountDetails WHERE Email = @Email )

BEGIN TRY

INSERT INTO dbo.AccountDetails (Email, Etc) VALUES (@Email, @Etc);

RETURN 0;

END TRY

BEGIN CATCH

-- ignore duplicate key errors, throw the rest.

IF ERROR_NUMBER() NOT IN (2601, 2627)

THROW;

END CATCH

UPDATE dbo.AccountDetails

SET Etc = @Etc

WHERE Email = @Email;

Comment by Martin — June 18, 2021 @ 7:51 am

@Martin,

By inspection it seems okay. One way to find out for sure is to test it.

There are some resources out there on doing tests for concurrency. My own page is https://michaeljswart.com/2014/01/generating-concurrent-activity/ or google SqlQueryStress

Comment by Michael J. Swart — June 24, 2021 @ 3:21 pm

@Michael,

Thanks for the answer. I have tested this solution but with no luck, I mean I couldn’t simulate entering to CATCH block. I have used WAIT FOR TIME on 10 connections in MMS to run this simultaneously (I have added INSERT INTO dbo.Errors table to indicate entering in CATCH block). Now when I am writing this I have an idea to block all connections by using sp_getapplock for example, and when I release it it should go simultaneously. So by now who knows for sure if it is working…

Comment by Martin — July 2, 2021 @ 4:00 am

My understanding of SQL Server serializable is that all SELECTs have an implicit HOLDLOCK. Therefore your serialazable solution cannot cause a deadlock. The first SELECT locks the rows, the procedure will continue only when the lock is acquired by the current transaction.

Ironically, in a realistic production environment, I’d expect that to be the only transaction that can’t cause deadlocks 🙂

Comment by Federico Razzoli — July 31, 2021 @ 10:49 am

Hi Federico,

Transactions using the Serializable isolation level can absolutely participate in deadlocks. In fact in my experience in practice they are often more likely to participate in deadlocks.

I’m trying, but I can’t understand where your misunderstanding might come from.

Comment by Michael J. Swart — July 31, 2021 @ 1:35 pm

[…] implement my test UPSERT procedure the way I’m supposed to like […]

Pingback by UPSERT Requires a Unique Index | Michael J. Swart — March 18, 2022 @ 10:11 am

[…] raw queries without an ORM then you could write a custom procedure and pass required parameters. HERE is a good write up on how that is done in MSSQL under high concurrency. You could use this as a […]

Pingback by Update SQL database registers based on JSON – SQL — May 12, 2022 @ 1:55 am

[…] Here is a useful article by Michael J. Swart on the matter, which covers different patterns and antipatterns for implementing UPSERT in SQL Server: https://michaeljswart.com/2017/07/sql-server-upsert-patterns-and-antipatterns/ […]

Pingback by How to upsert (update or insert) in SQL Server 2005 - IT Tutorial Point — October 15, 2022 @ 5:04 am

[…] By the way, here’s a good read on the subject: https://michaeljswart.com/2017/07/sql-server-upsert-patterns-and-antipatterns/ […]

Pingback by Only inserting a row if it's not already there — October 26, 2022 @ 2:45 am

[…] By the way, here’s a good read on the subject: https://michaeljswart.com/2017/07/sql-server-upsert-patterns-and-antipatterns/ […]

Pingback by Only inserting a row if it's not already there - Design Corral — January 15, 2023 @ 5:00 pm

What about best solution for joined tables ? Table1 FK to Table2 PK. SP params include only properties ( no keys ).

Comment by John Grandy — January 17, 2023 @ 2:46 pm

@John

A sentence fragment. Another. Good device. Will be used more later.

Inserts apply to only one table. I don’t know enough about what you’re trying to do.

If you have a values you call properties that are meant to be inserted into a table and if a subset of those values/columns uniquely identify a row, then those columns are a candidate key (whether its defined as a unique key or not).

If you don’t have a values that you can identify as a key (or a candidate key). Then you always INSERT and never UPDATE.

If you’re thinking to yourself “Never UPDATE? That can’t be right.” Then by answering why not, you’ll get your key.

Comment by Michael J. Swart — January 17, 2023 @ 2:59 pm

[…] Both of those things are wrong. Here’s a video about why: Where I most frequently do not see locking hints, or the use of an appropriate isolation level, is when developers still for some reason insist on using MERGE statements. […]

Pingback by The Art Of The SQL Server Stored Procedure: Locking Hints – Darling Data — December 18, 2023 @ 10:21 am