I was at the MVP Global Summit last week in Redmond, Washington. I was excited to go because I hadn’t been to the summit since 2014. Since I was travelling to the West Coast anyway, I applied to speak at SQL Saturday Victoria the weekend before and I got accepted. Then, I expanded my trip to include a visit to the D2L office near Vancouver, making it a week-and-a-half tour of the West Coast.

The bonus for me is that I got to visit British Columbia. I’ve spent my whole life in Canada, but I’ve never been to British Columbia and I’m so glad I went. That is one good looking province.

West Coast D2L

I started my trip by visiting D2L first.

Every single person there was awesome. D2L has a great work culture and the Vancouver office was no different. They treated me like family.

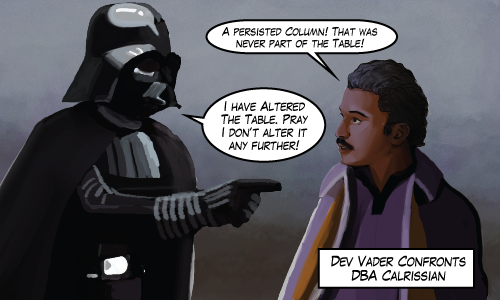

On the first day, we did a Q&A on anything database-related. There were some good questions and I get the feeling that some of the answers were surprising. For example, I don’t advocate for stored procedures over inline SQL.

At the end of the week, I got to practice the session that I prepared for SQL Saturday Victoria in front of my colleagues. Now that was interesting. I wrote the talk for a general audience. It’s always useful to imagine an audience member when writing a talk. I imagined someone who wanted to learn more about databases and chose to give up their Saturday to do it. This imaginary audience member chose to come to a talk called “100 Percent Online Migrations” and presumably was interested in schema migrations. So when I gave the talk to my D2L colleagues, it was interesting to be able to get into specifics because I know the business challenges we’re all dealing with. The Vancouver office gave awesome feedback and they didn’t heckle me too much.

Other sights included a Pikachu car, a build-is-broken light (just like home!) and an impromptu tour of the best coffee roaster in Richmond, BC.

Before I left for Victoria, I got to spend a little time in downtown Vancouver.

Downtown Vancouver

Even though I didn’t have a lot of time in downtown Vancouver, I did get to snap a couple photos before the sun went down. Mostly in and around Gastown.

Gassy Jack, Steam Clock, Vancouver Street

Another thing I noticed is that no one seems to smoke tobacco any more.

Before I left, I did manage to find the alley that Bastian ran down when he was chased by bullies in The NeverEnding Story. But the alley hasn’t aged well. It didn’t look like luck-dragons frequented that place any more so I didn’t take a picture.

Then it was time to head to SQL Saturday Victoria.

Ferry to Victoria

I spent Friday on buses and ferries. Long trips in Ontario are dull. If you’ve seen one corn field, you’ve seen them all. But in British Columbia, the passing scenery is beautiful. Here’s a timelapse video of part of the ferry ride to Swartz Bay (not Swart’s bay).

SQL Saturday Victoria

SQL Saturday in Victoria was great. I was relaxed, and the talk (I thought) went really really well.

Randolph tries not to heckle Michael

I wasn’t super-thrilled with the turnout. There were about ten people there (five were fellow speakers). And I have an uncomfortably long streak of having someone fall asleep during my talks. I know I’m not the most dynamic speaker but it’s starting to get on my nerves. That streak remains unbroken thanks in part to Erland’s jetlag.

But it remained a special SQL Saturday for me. I discovered that the venue was Camosun College in Victoria. Not only is Camosun College a client of D2L, but they’ve got a beautiful campus. Fun fact, spring has already arrived on campus there:

Spring!

Thanks to Janice, Scott and everyone else who ran a very very successful event.

On Sunday, Randolph, Mike and I waited in line for breakfast and walked around Victoria until the evening when we took a boat to meet Angela in Seattle, then on to Bellevue just in time for the MVP Global Summit in Redmond!

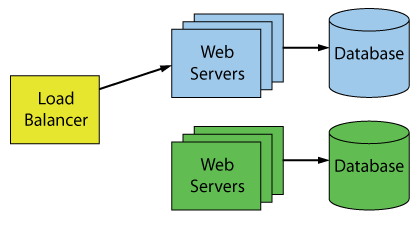

The 2018 MVP Global Summit

It’s been a while since I attended the MVP Summit. And this one was a good one. Everyone behaved for the most part. In past years, I remember a lot of people looking gift horses in the mouth. The feedback from MVPs to Microsoft sometimes took the form of angry complaints. Even if it was about something they didn’t know existed until that day. There was much less of that this year.

Personally, I got to give feedback about some SQL Server features, and I got to hear about new stuff coming up. I’m always very grateful to Microsoft for putting on this event. I returned home with a lot more blue stuff than I started with.

What does this photobooth do?

(Special thanks to Scott, Mike, Angela and Randolph for making this trip extra fun. You’re the best.)