Something New For Me

I’m relatively new to SQL Server Integration Services (SSIS). And so I want to explain how I solved a problem and invite Business Intelligence (BI) experts (and wannabe experts) to comment or point out things that I missed.

A Short Description of the Problem

So here’s a short description of the problem I’m trying to solve. It involves populating a dimension table. I want a data flow transformation which removes duplicate key values. In other words, every time I come across a new key value for a dimension, I want to pass through that entire row (not just the key values). This is kind of like a cross between T-SQL’s DISTINCT and FIRST_VALUE.

I found out recently that people have treated this topic before:

But because of my bad luck, I only found these posts after I muddled through a solution. But I was surprised to find out my solution had some interesting differences.

The Setup

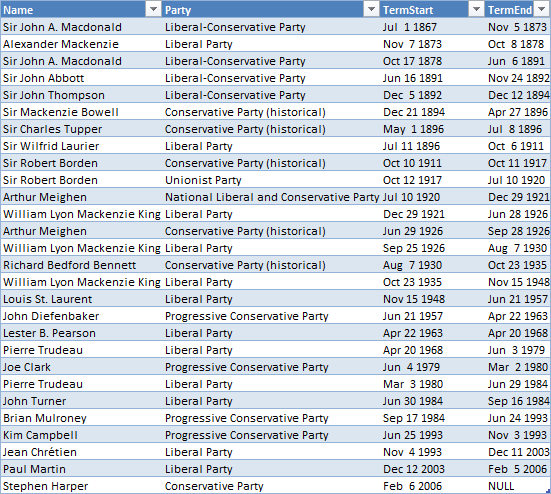

First I’ll explain my situation. Say I’ve got a flat file containing data about Prime Ministers:

My main goal is to use this data to populate a data warehouse. That data warehouse has a schema like this:

This example is just for illustration. In real life, the number of fact rows is very large, and the number of dimension rows is relatively small. The time dimension will be pre-populated, but I want to load the other dimensions using values from the flat file. So that means I plan to process the data twice: The first time will be to load these dimensions, and the second time will be to load the fact table. When I process the data for dimensions, I :

- Use a lookup transform to find whether that dimension already exists in the warehouse

- Use the No Match Output to identify dimension rows I haven’t seen before. Those rows will be used to load the dimension. (i.e. I’m treating the dimension like a Never-Changing-Dimension rather than a Slowly Changing Dimension.)

The problem is that these unmatched rows can be duplicated and I don’t want those duplicate dimensions rows getting into the dimension table.

Things I considered:

- I considered using a staging table on the DB side. But that required too much space, the amount of space needed was comparable to the fact table, not the dimension table.

- I also considered inserting into dimensions a row-at-a-time using the MERGE statement on the database side. But I didn’t consider that for long. The performance with that strategy was so unacceptable.

- I could have used a sort/aggregate, but that was a blocking transformation and I really didn’t need the data sorted.

What I ended up with

I created a script transform which seems to do the trick for what I want. My Data Flow task looks like this:

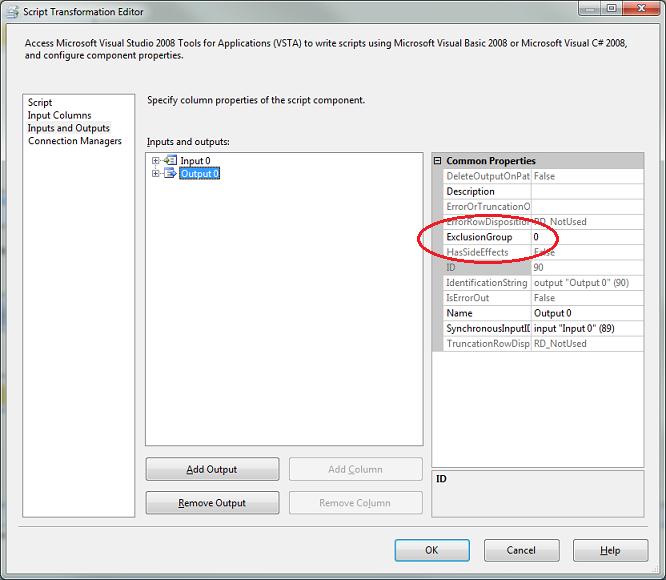

In those script transforms you see up there, I told SSIS that each script will be used to filter rows out or let them pass through. I did that by setting ExclusionGroup to 1 (by default, it’s normally 0). Here’s where that’s done:

Then in the script, I created a list of seen dimensions. And I let rows pass through if they haven’t yet been seen. The script simply looks like this:

private HashSet<string> seenParty = new HashSet<string>(); public override void Input0_ProcessInputRow(Input0Buffer Row) { if (Row.Party_IsNull) return; if (!seenParty.Contains(Row.Party)) { seenParty.Add(Row.Party); Row.DirectRowToOutput0(); } } |

Update October 9, 2013: And the seenParty variable should be defined as a HashSet. (See Abacus’s comments)

Logically it behaves exactly the same as the sort transformation (as explained in “Dead Easy Duplicate Key Removal” by Todd McDermid). Except for these things:

- It seems to perform fast

- It’s not a blocking transformation

- But you don’t get all that for nothing. What we’re not getting with this method is that the rows aren’t sorted. In most cases, that doesn’t matter.

- It takes some memory, so you have to be aware of that. Ask yourself, how well can all unseen dimension keys fit in memory?

Some questions for you BI guys

And here’s where I’d like you to chime in.

- What’s the most common approach to this problem?

- I get the feeling that I could use the Cache Transform for this technique. But I haven’t tried it.

- Are there any new features in SQL 2012 that help?

- Am I re-inventing the wheel here?

- Am I missing anything else that should be obvious?