Something New For Me

I’m relatively new to SQL Server Integration Services (SSIS). And so I want to explain how I solved a problem and invite Business Intelligence (BI) experts (and wannabe experts) to comment or point out things that I missed.

A Short Description of the Problem

So here’s a short description of the problem I’m trying to solve. It involves populating a dimension table. I want a data flow transformation which removes duplicate key values. In other words, every time I come across a new key value for a dimension, I want to pass through that entire row (not just the key values). This is kind of like a cross between T-SQL’s DISTINCT and FIRST_VALUE.

I found out recently that people have treated this topic before:

But because of my bad luck, I only found these posts after I muddled through a solution. But I was surprised to find out my solution had some interesting differences.

The Setup

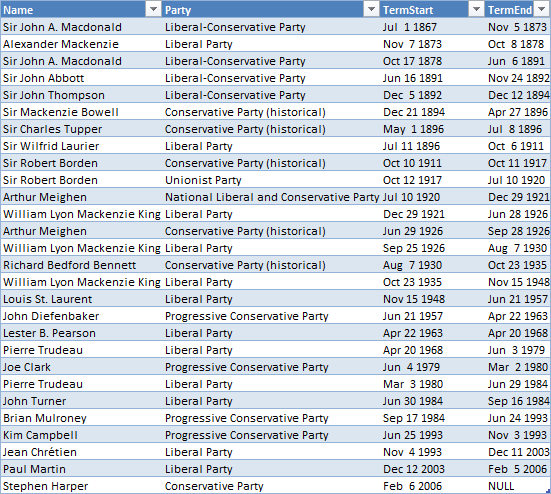

First I’ll explain my situation. Say I’ve got a flat file containing data about Prime Ministers:

My main goal is to use this data to populate a data warehouse. That data warehouse has a schema like this:

This example is just for illustration. In real life, the number of fact rows is very large, and the number of dimension rows is relatively small. The time dimension will be pre-populated, but I want to load the other dimensions using values from the flat file. So that means I plan to process the data twice: The first time will be to load these dimensions, and the second time will be to load the fact table. When I process the data for dimensions, I :

- Use a lookup transform to find whether that dimension already exists in the warehouse

- Use the No Match Output to identify dimension rows I haven’t seen before. Those rows will be used to load the dimension. (i.e. I’m treating the dimension like a Never-Changing-Dimension rather than a Slowly Changing Dimension.)

The problem is that these unmatched rows can be duplicated and I don’t want those duplicate dimensions rows getting into the dimension table.

Things I considered:

- I considered using a staging table on the DB side. But that required too much space, the amount of space needed was comparable to the fact table, not the dimension table.

- I also considered inserting into dimensions a row-at-a-time using the MERGE statement on the database side. But I didn’t consider that for long. The performance with that strategy was so unacceptable.

- I could have used a sort/aggregate, but that was a blocking transformation and I really didn’t need the data sorted.

What I ended up with

I created a script transform which seems to do the trick for what I want. My Data Flow task looks like this:

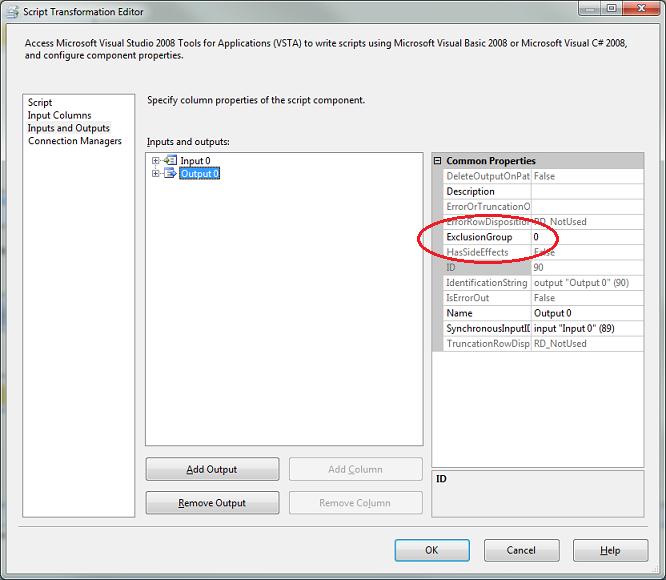

In those script transforms you see up there, I told SSIS that each script will be used to filter rows out or let them pass through. I did that by setting ExclusionGroup to 1 (by default, it’s normally 0). Here’s where that’s done:

Then in the script, I created a list of seen dimensions. And I let rows pass through if they haven’t yet been seen. The script simply looks like this:

private HashSet<string> seenParty = new HashSet<string>(); public override void Input0_ProcessInputRow(Input0Buffer Row) { if (Row.Party_IsNull) return; if (!seenParty.Contains(Row.Party)) { seenParty.Add(Row.Party); Row.DirectRowToOutput0(); } } |

Update October 9, 2013: And the seenParty variable should be defined as a HashSet. (See Abacus’s comments)

Logically it behaves exactly the same as the sort transformation (as explained in “Dead Easy Duplicate Key Removal” by Todd McDermid). Except for these things:

- It seems to perform fast

- It’s not a blocking transformation

- But you don’t get all that for nothing. What we’re not getting with this method is that the rows aren’t sorted. In most cases, that doesn’t matter.

- It takes some memory, so you have to be aware of that. Ask yourself, how well can all unseen dimension keys fit in memory?

Some questions for you BI guys

And here’s where I’d like you to chime in.

- What’s the most common approach to this problem?

- I get the feeling that I could use the Cache Transform for this technique. But I haven’t tried it.

- Are there any new features in SQL 2012 that help?

- Am I re-inventing the wheel here?

- Am I missing anything else that should be obvious?

Hi Michael,

Short answer – seems like a very nice solution to me. In answer to your Qs:

1. What’s the most common approach to this problem?

JT: Probably a Sort transform although as you correctly identify this does have issues

2. I get the feeling that I could use the Cache Transform for this technique. But I haven’t tried it.

JT: I just read at Cache Transform – http://msdn.microsoft.com/en-us/library/bb895264.aspx that “The Cache Transform writes only unique rows to the Cache connection manager.” which was news to me. However, I don;’t think tsi of use to you here as in order to use the cache you need another Dataflow – which kinda defeats the object.

3. Are there any new features in SQL 2012 that help?

JT: Not that I know of

4. Am I re-inventing the wheel here?

JT: Nope, I think this is a pretty sweet solution.

5. Am I missing anything else that should be obvious?

JT: Some (not me) might say that you should make it into a custom component. Wouldn’t worry about that too much tho.

Just on these points:

-It seems to perform fast

-It’s not a blocking transformation

Its fast *because* its not a blocking transform, so arguably these two bulelt points are bsaically saying the same thing.

Great post!

JT

Comment by Jamie Thomson — February 2, 2012 @ 8:46 am

Thanks Jamie! That’s very encouraging.

Every apprentice needs a mentor and the SQL community seems to fill that role for me.

Thanks again.

Comment by Michael J. Swart — February 2, 2012 @ 8:50 am

Out of interest, what is the type of “seenParty”? Is it just an Array?

Comment by Jamie Thomson — February 2, 2012 @ 9:16 am

I used List<string>,

Maybe SortedList would probably be the better choice. I don’t know too much about the guts of those collections but SeenParty.Keys.Contains() function would perform better.

Comment by Michael J. Swart — February 2, 2012 @ 9:30 am

I use the same approach for de-duplicating rows when processing late arriving dimension values on large fact tables. It works really well, and is easy to set up.

Comment by John Welch — February 2, 2012 @ 9:49 am

1. I dunno, check Thomas LaRock’s tweet with link on How to Count all the Elephants in Africa 🙂

2. I dunno, I like Jamie’s answer; always a safe bet 😉

3. 2012? What… a new version… where have I been? – I don’t think so either

4. Everyone needs a new wheel once in awhile, custom with lower role resistance, nice white wall and good handling…

5. As I thought of things, I re-read your post, each time pondering.

Do you have a great number or dimensions in your real data set?

Is your original dataset in an RDBMS that you could issue a DISTINCT on?

Would a sorted de-duped list work quickly with an SSIS Merge Join? I seem to remember Matt talking about some patterns to use for Dimensional load based on the size and shape of the incomming and the destination dimension tables — good for general rules of thumb.

I would likely not have cared too much about the blocking. In fact I’d like to see a solution without a script.

Comment by Scott Stauffer — February 2, 2012 @ 10:37 am

“Do you have a great number or dimensions in your real data set?”

I meant to say “a great number of dimensional _members_ in your real data set”

“I would likely not have cared too much about the blocking.”

I meant to say “I would likely not have cared too much about the blocking _in this cirumstance_”

— Wow, I need a full time editor! 🙂

Comment by Scott Stauffer — February 2, 2012 @ 10:44 am

Hi Scott, thanks for the feedback.

Comment by Michael J. Swart — February 2, 2012 @ 11:06 am

The lookup will only know about them if you have it in no-cache mode. Even then its still possible for records to arrive into the destination *after* a later row with matching key arrives at the lookup – for that reason I can’t see a reason that that pattern should ever be used. Avoid.

Comment by Jamie Thomson — February 2, 2012 @ 11:21 am

Good to know Jamie, so its use will strictly be to filter out existing dimension rows during incremental loads.

Comment by Michael J. Swart — February 2, 2012 @ 11:24 am

[…] Removing Duplicate Dimension Rows in SSIS- Wandering into new territory but with confidence and flair, this week Michael J. Swart (Blog|Twitter) gives us some SSIS goodness. […]

Pingback by Something for the Weekend – SQL Server Links 03/02/12 — February 3, 2012 @ 6:03 am

How about inserting into a view with an INSTEAD OF trigger?

Comment by Bob — February 7, 2012 @ 1:43 pm

That’s an interesting idea that I never considered. I have a couple questions:

* What does a trigger on a view get us over a trigger on the dimension table?

* Wouldn’t a large amount of data get sent to the datawarehouse? I believe performance would be comparable to the MERGE statement I mentioned above.

Comment by Michael J. Swart — February 7, 2012 @ 1:54 pm

Hi Michael,

Am a regular here on this your site. I searched Google for this subject and it took me here.

I have over 6million records which I want to remove duplicate from. Had tried “Dead Easy Duplicate Key Removal”, Yes it took an hour. I want something faster, so I tried your example, alas it’s taking longer time, 1 million records for 2 hours. Is there anything am missing?

See my script below

Really I don’t want to go block transformation way.

Thanks

Comment by Abacus — October 10, 2013 @ 9:28 am

Wow, 2 hours for 1 million rows is too long. And I’m not sure what you could be missing.

I do have one guess, and it’s about memory: You have 6 million rows, but roughly how many distinct account numbers are there? If there are say 5.9 million distinct account numbers, then that has to fit in memory and the list won’t well at all. I guess you could say that this solution works only when the number of distinct account numbers is small.

If the number of distinct account numbers is large, then, you might consider running things in batches. The filter existing lookup may help in this case and keep the List small.

Now, if it’s not memory, I can’t guess what the problem might be without more info. If you do crack this one Abacus, be sure to report back what you found.

and good luck.

Comment by Michael J. Swart — October 10, 2013 @ 9:43 am

Thanks my Michael,

Digging my search further, I came across http://stackoverflow.com/questions/823860/c-listt-contains-too-slow. I used HashSet and see my definition below

It too 5 minutes. Great result.

Thanks.

Comment by Abacus — October 10, 2013 @ 10:58 am

That is awesome! I’ll update the post for others.

Comment by Michael J. Swart — October 10, 2013 @ 11:01 am

Hey Michael,

Came across this post while Googling for another SSIS challenge I’m running into but I figured I’d drop a note to let you know what I’ve been doing to avoid processing the data twice to first create the dimensions and then the facts. Basically, I’m doing this neat trick – http://blogs.msdn.com/b/sqlcat/archive/2009/05/13/assigning-surrogate-keys-to-early-arriving-facts-using-integration-services.aspx

While processing your data, do a full cache lookup to try getting the dimension’s surrogate key, direct any non-matched rows to a second partial cache lookup where you create the missing dimension members, and then union all the two streams back together.

Comment by Aaron Cooper — December 20, 2013 @ 9:51 pm