Devops, Agile and Continuous Delivery

Sometimes I get asked about work and our software development practices. Often these questions use words like agile, devops, or continuous delivery as in “Are you agile?” or “Do you do continuous delivery?”. But these questions rarely have yes or no answers. I always want to answer “It’s a work in progress”.

One of the things I like best about the PASS Summit is the opportunity to talk to people to find out how they do development. After speaking with others, it seems like everyone’s processes are always going to be works-in-progress. But I’ve come to realize that at D2L, we’re pretty far along. Here are just some of the things we do:

Things We Do

- Deployments to production are scheduled and almost completely automatic. This lets us deploy way more often than we used to.

- All code – including procedures and table definitions – are checked in.

- Automatic tests are run on every pull request and merge.

- Safety is a huge concern. We use feature flags and other techniques, but it remains difficult to maintain large complicated systems safely.

- We use blue-green deployments for zero downtime.

- The database layer is an exception to this blue-green technique. So it’s very important to be able to rollback any changes or configuration.

Sardines and Whales

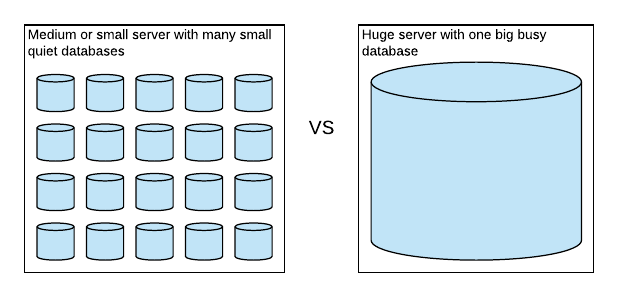

This means we must also support thousands of copies of our main database. They’re used for client sites, test sites, qa sites, or whatever. So that leads to a variety of server configurations that I refer to as sardines and whales:

Look at those sardines. They’re quite happy where they are. The server can handle up to a thousand databases when there’s almost no activity.

But that whale is on a huge server and is extremely busy. Because of the high volume of transactions, we sometimes encounter tempdb contention due to our frequent use of table valued parameters. One technique I’ve been looking forward to evaluating is using memory optimized table types.

Maybe We Can Use In Memory OLTP?

I’m actually not very interested in memory optimized tables. I’m much more interested in the memory optimized table types. Those types can be used for table valued parameters. I can’t tell you how excited I was that it might solve my tempdb pet peeve.

But our dreams for the feature died

We’re leaving the feature behind for a few reasons. There’s an assumption we relied on for the sardine servers: Databases that contain no data and serve no activity should not require significant resources like disk space or memory. However, when we turned on In Memory OLTP by adding the filegroup for the memory-optimized data, we found that the database began consuming memory and disk (about 2 gigabytes of disk per database). This required extra resources for the sardine servers. So for example, 1000 databases * 2Gb = 2Tb for a server that should be empty.

Another reason is that checkpoints began to take longer. Checkpoints are not guaranteed to be quick, but on small systems they take a while which impacts some of our Continuous Integration workflows.

At the PASS Summit, I talked to a Hekaton expert panel. I also talked to a couple people in the Microsoft SQL Server clinic about some of my issues. They all recommended that we upgrade to SQL Server 2016 (which we can’t yet). Maybe I didn’t phrase my questions well, but I didn’t come away with any useful strategy to pursue.

I later talked to a Speaker Idol contestant Brian Carrig (@briancarrig) after hearing him talk briefly about his experiences with In Memory OLTP. He mentioned his own hard-fought lessons with In Memory OLTP including some uncomfortable outages.

The final nail in the coffin, as it were, is that once you turn on In Memory OLTP, you can’t turn it off. Once the In Memory OLTP filegroup is added, it’s there for good. Like I said, safety is a huge concern for us so we’re giving up on the feature for now.

Resurrecting the Feature?

The feature was designed for whales, not sardines. Maybe someday we will try to get those sardine servers to not fail with In Memory OLTP. Until then, the feature goes back on the shelf.

Funny timing on this. We literally have a call open with MS right now because we have a database with a half-terabyte log file that won’t shrink, despite the DB being in simple mode. Appears to be IMOLTP related.

I’m starting to feel about it like I feel about Service Broker (which we have in place in several places, as well as on every server by dint of using Event Notifications): when it works it’s amazing, and when it doesn’t you question your decision to ever start using it.

Comment by mbourgon — November 10, 2017 @ 4:13 pm

In-Memory OLTP is certainly not for everyone but it can solve some very real problems and when it does it can be positively mind blowing. PAGELATCH_EX contention on the system base tables in tempdb was a very real problem for us until we started using memory optimized table types (and in some cases, actual memory optimized tables utilizing row level security and constraints to mimic the behavior of a temporary table).

Comment by Brian Carrig — November 10, 2017 @ 4:59 pm

[…] Michael J. Swart relays a cautionary table around using In-Memory OLTP: […]

Pingback by The Difficulties Of Memory-Optimized Tables – Curated SQL — November 13, 2017 @ 8:01 am

@mbourgon

Log files that won’t shrink despite simple mode! That’s the stuff of nightmares.

I did want this post to come out on Halloween (you might be able to tell by the spooky graveyard). But I postponed the post to include stuff I learned at Summit.

Comment by Michael J. Swart — November 13, 2017 @ 9:09 am

@Brian

PAGELATCH contention in tempdb is my nemesis. It is a real problem for us too. I really hope there’s a scenario that allows us to use memory-optimized tvps. The memory-optimized tvps don’t even use the memory-optimized filegroup do they?

In my ideal world, Microsoft could split off Hekaton table types from the rest of Hekaton and I wouldn’t need that stupid filegroup in the first place.

Comment by Michael J. Swart — November 13, 2017 @ 9:11 am

I previously had a similar issue as “half-terabyte log file that won’t shrink, despite the DB being in simple mode”. My fix was set mode to fully logged, shrink file then reset to simple. Not sure if this fix would work in your scenario.

Comment by Dusky — November 19, 2017 @ 5:20 am

>> In my ideal world, Microsoft could split off Hekaton table types from the rest of Hekaton and I wouldn’t need that stupid filegroup in the first place.

I second that. It would be good to use memory-optimized table variables without having to create an IMO Filegroup.

No grow on space, no affecting checkpoints, no log truncation issues. Please request a DCR (Design Change Request).

Comment by Alejandro Hernandez — February 2, 2018 @ 4:22 pm

My conclusion is that In-Memory OLTP does not support migrations in most cases. It is maybe good for new projects where you expect many transactions, but migrating an existing database is not properly supported.

Furthermore, Microsoft documentation states:

You cannot issue an ALTER TABLE statement to convert a disk-based table into a memory-optimized table. Instead, you must use a more manual set of steps

• Suspend application activity.

• Take a full backup.

• Rename your disk-based table.

• Issue a CREATE TABLE statement to create your new memory-optimized table.

• INSERT INTO your memory-optimized table with a sub-SELECT from the disk-based table.

• DROP your disk-based table.

• Take another full backup.

• Resume application activity

Successfully migrating a table to In-Memory OLTP is a lot of work. Imagine that you have to use this recipe for converting hundreds of tables. That does not sound good!

Furthermore there is an issue with CHECKDB(CHECKTABLE).

DBCC CHECKTABLE (‘[HumanResources].[Employee]’)

Will return

“Msg 5296, Level 16, State 1, Line 1

Object ID 1141579105 (object ‘Employee’): The operation is not supported with

memory optimized tables. This object has been skipped and will not be processed.”

If you decide to migrate a small number of tables, you will get another unpleasant surprise. It’s not possible to establish a relationship between an in-memory table and a disk-based table. Foreign keys between in-memory and disk-based tables are not supported. Basically, you will end up with a database without referential integrity. Furthermore, if you would like to use triggers to establish referential integrity, you get another surprise: there are no possibilities to access disk-based tables in natively compiled triggers, and only that kind of trigger (natively compiled) is allowed on In-Memory optimized tables.

Although Microsoft has invested a lot of effort in In-Memory OLTP technology, currently the functionality is not satisfactory. We cannot migrate databases without losing referential integrity or do a lot of manual work.

After the migration, we have to do very hard work to rewrite the apps. Finally, the result is far, far away from 100x. You will get the unstable application and many new difficulties.

Comment by Darko Martinovic — May 17, 2018 @ 2:50 am

Hi Darko,

In Memory OLTP is a collection of technologies.

You’re talking about the first item, Memory-optimized tables, which has all the drawbacks you mentioned.

I was talking about the last item, memory-optimized table types.

For me, migration would not be my issue. I would be able to use memory-optimized table types without the need for application downtime.

Comment by Michael J. Swart — May 17, 2018 @ 9:26 am

Good post.

Please let me know how checkpoint gets slower for imoltp.

Comment by Maneesh ar — February 19, 2019 @ 9:43 am

Michael :-

As always point on.

You are always needfully clear and precise.

I like the rigor you use for evaluating technology and ensuring that you just don’t use it, but that you use it well.

And, that there is a viable back out.

Daniel Adeniji

Comment by Daniel Adeniji — October 8, 2019 @ 8:24 pm

Showed up here because we are dealing with a SolarWinds database that is in Simple mode, but the log file is continuously growing.

There is a MEMORY_OPTIMIZED_DATA_FILEGROUP in this database that has no db files associated with it.

DBCC SHRINKFILE does not work.

CHECKPOINT will bring the log file usage down, but has at least once resulted in a fatal error, leaving the database in SUSPECT mode.

The error from executing CHECKPOINT was this:

ERROR] Database ID: [8] ‘databasename’. Failed to load XTP checkpoint. Error code: 0x82000018. (e:\b\s3\sources\sql\ntdbms\hekaton\sqlhost\sqlmin\hkhostdb.cpp : 5367 – ‘HkHostRecoverDatabaseHelper::ReportAndRaiseFailure’)

Comment by TheFrustratedDba — March 26, 2020 @ 7:02 pm