Last week I was asked to troubleshoot some deadlocks in production. I was surprised to find out that parameter sniffing was one of the causes. I describe the investigation below.

Parameter Sniffing

SQL Server does this neat trick when you give it a query with parameters. The query optimizer will take the parameter values into account when making cardinality estimates. It finds the best query plan it can for these values. This is called parameter sniffing.

But parameter sniffing combined with query plan caching means that SQL Server seems to only care about parameter values the first time it sees a query. So ideally the first parameter values should be typical parameter values.

When people talk about parameter sniffing problems, it’s usually because this assumption doesn’t hold. Either the query was compiled with atypical values, or maybe the data has an uneven distribution (meaning that “typical” parameter values don’t exist).

But remember that…

The Problem

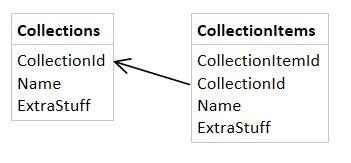

The problem I saw in production involved some fairly typical looking tables. They looked something like this:

This is how they were defined.

create table dbo.Collections ( CollectionId bigint identity primary key, Name nvarchar(20) default ('--unnamed--') not null, Extrastuff char(100) not null default ('') ); create table dbo.CollectionItems ( CollectionItemId bigint identity primary key, CollectionId bigint not null references dbo.Collections(CollectionId), Name nvarchar(20) default ('--unnamed--') not null, ExtraStuff char(100) not null default ('') ); create index ix_CollectionItems on dbo.CollectionItems(CollectionId); |

The errors we were getting were deadlock errors and the deadlock graphs we collected were always the same. They looked something like this:

See that procedure called s_CopyCollection? It was defined like this:

create procedure dbo.s_CopyCollection @CollectionId bigint as set nocount on; declare @NewCollectionId bigint; if @CollectionId = 0 return; if not exists (select 1 from dbo.Collections where CollectionId = @CollectionId) return; set xact_abort on; begin tran; insert dbo.Collections (Name, Extrastuff) select Name, ExtraStuff from dbo.Collections where CollectionId = @CollectionId; set @NewCollectionId = SCOPE_IDENTITY(); insert dbo.CollectionItems (CollectionId, Name, ExtraStuff) select @NewCollectionId, Name, ExtraStuff from dbo.CollectionItems where CollectionId = @CollectionId; commit; |

It’s a pretty standard copy procedure right? Notice that this procedure exits early if @CollectionId = 0. That’s because 0 is used to indicate that the collection is in the “recycle bin”. And in practice, there can be many recycled collections.

Some Digging

I began by reproducing the problem on my local machine. I used this method to generate concurrent activity. But I couldn’t reproduce it! The procedure performed well and had no concurrency issues at all.

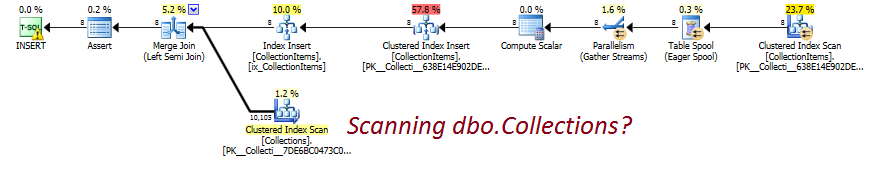

This meant more digging. I looked at the procedures behavior in production and saw that they were performing abysmally. So I grabbed the query plan from prod and here’s what that second insert statement looked like:

This statement inserts into CollectionItems but it was scanning Collections. That was a little confusing. I knew that the insert needed to check for the existence of a row Collections in order to enforce the foreign key, but I didn’t think it had to scan the whole table. Compare that to what I was seeing on my local database:

I looked at the compilation parameters (SQL Sentry Plan Explorer makes this easy) of the plan seen in production and saw that the plan was compiled with @CollectionId = 0. In this case, the assumption about parameter sniffing I mentioned earlier (that the compilation parameters should be typical parameters) did not hold.

This procedure was performing poorly in production (increasing the likelihood of overlapping executions times) but also, each one was taking shared locks on the whole Collections table right after having inserted into it. The whole procedure uses an explicit transaction and that’s a recipe for deadlocks.

Doing Something About It

Here are things I considered and some actions I took. My main goal was to avoid the bad plan shown above.

- Never call the procedure with

@CollectionId = 0. The early-exit in the procedure was not enough to avoid bad query plans. If the procedure never gets called with@CollectionId = 0, then SQL Server can never sniff the value0. - I began to consider query hints. Normally I avoid them because I don’t like telling SQL Server “I know better than you”. But in this case I did. So I began to consider hints like:

OPTIMIZE FOR (@CollectionId UNKNOWN). - I asked some experts. I know Paul White and Aaron Bertrand like to hang out at SQLPerformance.com. So I asked my question there. It’s a good site which is slightly better than dba.stackexchange when you want to ask about query plans.

- Aaron Bertrand recommended

OPTION (RECOMPILE). A fine option. I didn’t really mind the impact of the recompiles, but I like keeping query plans in cache when I can, just for reporting purposes (I can’t wait for the upcoming Query Store feature) - Paul White recommended a

LOOP JOINhint on the insert query. That makes theINSERTquery look like this:insert dbo.CollectionItems (CollectionId, Name, ExtraStuff) select @NewCollectionId, Name, ExtraStuff from dbo.CollectionItems where CollectionId = @CollectionId option (loop join);

That was something new for me. I thought

LOOP JOINhints were only join hints, not query hints. - Paul White also mentioned some other options, a

FAST 1hint or a plan guide and he also suggestedOPTION (RECOMPILE).

So I stopped calling the procedure with @CollectionId = 0 and I used a query hint to guarantee the better plan shown above. The performance improved and the procedure was no longer vulnerable to inconsistent performance due to parameter sniffing.

In general, there seem to be only two ways to avoid deadlocks. The first way minimizes the chance that two queries are executing at the same time. The second way carefully coordinates the locks that are taken and the order they’re taken in. Most deadlock solutions boil down to one of these methods. I was happy with this solution because it did both.

Fantastic article, I’ve never seen this behaviour before. You must have been tearing your hair out.

It’s interesting to see all the options available. As I’m a DBA rather than a DBD I would have chosen the plan guide; it doesn’t require a new build (like cutting out @CollectionID = 0 code paths), it doesn’t require a new stored procedure with the hint passed back to the developers to huff and haw and stick into source control either.

On the other hand yeah the plan guide will break an application upgrade down the track unless it’s specifically catered for (dropped and recreated). Not to mention what it might cause down the track with an SQL Server upgrade… oh sure it’s in the documentation to check for them but few expect or do it.

Tough call.

Comment by Cody — March 5, 2015 @ 6:27 am

Hey Cody,

I’m out of hair to tear out, I’ve been doing this for a while and I’ve practiced some decent digging techniques which serve me pretty well.

Don’t get me wrong, I could easily double or triple the length of this article with things I tried that turned out to be dead ends. But the digging part took me about a day.

About the options. Specifically the plan guide. You’re absolutely right and those considerations went through my mind too. As a dev for a ISV, it’s easier for us to bake solutions into the product so that we don’t have to rely on deployment instructions. In our situation, new builds happen regularly whether I get my fix in or not. In terms of optimizing headaches-avoided, this was the best path for us.

Comment by Michael J. Swart — March 6, 2015 @ 4:33 pm

[…] When Parameter Sniffing Caused Deadlocks – Michael J. Swart (Blog|Twitter) […]

Pingback by (SFTW) SQL Server Links 13/03/15 - John Sansom — March 13, 2015 @ 4:46 am

I’m always preventing param sniffing when sp’s parameters are used in where clauses with surrogate local variables like this

declare @_CollectionId bigint

select @_CollectionId = @CollectionId

… and using @_CollectionId in the body of the sp. This has always worked great (since sql2000) and gets plans in cache which is important if base tables get in millions of rows or queries get complicated (lots of columns, lots of base tables).

CREATE PROC … WITH RECOMPILE is a viable option (since sql2000) when there are outliers e.g. in your case if half the rows in dbo.CollectionItems are for CollectionId = 0 then an index seek plan produced by local variables surrogates would be abysmal. So the sp will be fast, fast, fast and then suddenly slooow for a particular edge case, while WITH RECOMPILE it would be constantly average in speed.

Comment by wqweto — March 16, 2015 @ 1:42 pm

Hi wqweto,

The surrogate local variable method you mention has a more elegant syntax available to us since 2008, which is

OPTIMIZE FOR UNKOWN.OPTIMIZE FOR UNKOWNis exactly equivalent to what you described. It’s a fascinating lesson I explored in Is this query equivalent to SQL Server 2008’s OPTIMIZE FOR UNKNOWN?.I guess I’m writing my articles today with the assumption that readers are at version 2008 or later. If I alienate people who are running boxes at 2005 or earlier, I guess I can live with that.

I did consider the RECOMPILE hint, and as I mentioned in the article, I had my reasons for keeping query plans in the cache when I can.

Comment by Michael J. Swart — March 16, 2015 @ 2:28 pm

@Michael: My previous comment was more geared towards readers of your blog, sorry for sounding a bit “at level 100”.

Btw, did you consider manually serializing the transaction by moving existence check inside the transaction and UPDLOCK hinting it something like this

begin tran;

if not exists (select 1 from dbo.Collections WITH (UPDLOCK, ROWLOCK) where CollectionId = @CollectionId)

return;

…

Btw, was RCSI on?

Comment by wqweto — March 17, 2015 @ 11:02 am

Hi wqweto,

The manual serialization is a great trick. I actually use it sometimes myself and I wish I added it to my “things I considered” section. In this case I don’t like it as well as my “avoid parameter sniffing issues” fix because the fix I chose happened to include a performance improvement along with it.

RCSI was not on. One day I’d like to turn it on, but I need to be careful about it. At the moment, my company is wary of anything that uses tempdb more than it should.

Comment by Michael J. Swart — March 17, 2015 @ 11:44 am

Hi,

Could you not have simply removed the bad plan and execute the procedure with a id other than 0 for the first run. Admittedly the problem could return if the plan left the cache again and was compiled against a query with 0 as its id.

Comment by steve — March 18, 2015 @ 7:56 am

Hi Steve,

I could have. I wrote about sharpshooting query plans in the past. I mentioned there that this technique buys us time but is not a fix for the reason you mentioned; the problem could return if the query gets recompiled.

In our case, we’re a vendor with hundreds of installations of our software and although it was rare for us to have the compilation parameter value = 0, when we aggregate the clients the problem was common.

Comment by Michael J. Swart — March 18, 2015 @ 10:51 am

[…] https://michaeljswart.com/2015/02/when-parameter-sniffing-caused-deadlocks/: […]

Pingback by Deadlocks – Knee Deep In Data — February 28, 2018 @ 4:17 pm