1. Troubleshoot acute blocking problems

Sometimes it’s too late to prevent blocking. Blocking is happening now! And it’s up to you to fix it. It’s a high-stress situation you can find yourself in and it’s often useful to find out who’s blocking who. I’ve written a query that can indicate if there’s blocking and if so who’s blocking who. Here’s a link to that query.

Best case scenario is that you can quickly identify one query (such as a maintenance task) that is blocking other people. Stop the query (unblocking others) and then spend your time analyzing and fixing the rogue query.

2. Watch for blocked processes that occur less frequently.

Consider the scenario where performance on the database suffers occasionally, but it’s sporadic and it’s hard to catch the problem red-handed. I’ve found the blocked process report really useful. It’s a new event in SQL 2005 that you can trace through profiler or any other tracing utility you use.

A couple things you need to set up first:

sp_configure 'show advanced options', 1

RECONFIGURE WITH override

GO

sp_configure 'blocked process threshold', 10

RECONFIGURE WITH override

GO

In the code above, I’ve set the blocked processes threshold to 10. So if SQL Server detects more than 10 processes that are waiting because of blocked resources, it will fire a blocked process event. This event can be traced through profiler or by using sp_trace_* sprocs. SQL Server will then wait 10 seconds before deciding to issue another blocked process report. It is designed this way to make a very lightweight trace.

By digging into the blocked process reports you’ll find that the reports can tell a very good story about blocking on your server. You can often find problems before they’re noticed by an end user. You can see we’re moving closer to proactive and further from reactive.

3. Look at blocking from the object’s point of view

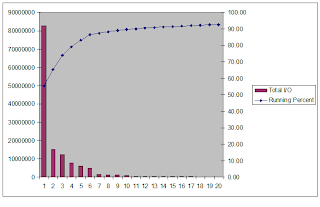

So there are no acute problems and the blocked process report hasn’t popped up in a long while. You’re done right? Not necessarily. There’s one last look into your server that can help you make adjustments to database design that will really make your db scalable. So far, up until now, we’ve looked at which processes are blocking which other processes. We can now shift our point of view to look at which tables (or indexes) are participating most often in row lock waits.

The following query comes from Colin Roberts.

SELECT OBJECT_NAME(s.OBJECT_ID) AS TableName

,ISNULL(i.name,'HEAP') AS IndexName

,CASE i.index_id

WHEN 0 THEN 'HEAP'

WHEN 1 THEN 'CLUS'

ELSE 'NC'

END AS IndexType

,row_lock_count, row_lock_wait_count

,[block %]=CAST (100.0 * row_lock_wait_count / (1 + row_lock_count) AS numeric(15,2))

, row_lock_wait_in_ms

, [avg row lock waits in ms]=CAST (1.0 * row_lock_wait_in_ms / (1 + row_lock_wait_count) AS numeric(15,2))

FROM sys.dm_db_index_operational_stats (DB_ID(), NULL, NULL, NULL) s JOIN sys.indexes i

ON i.OBJECT_ID = s.OBJECT_ID AND i.index_id = s.index_id

WHERE OBJECTPROPERTY(s.OBJECT_ID,'IsUserTable') = 1

ORDER BY row_lock_wait_count DESC

It identifies tables that have chronic problems with regards to locking (For acute problems see step 1 or 2 or take a snapshot and compare with differences).

< br />Say you identify a table that shows a lot of blocking. You can focus your analysis on the use of that table. Identify queries that write a lot to that table and read a lot from that table. Chances are that you’ll find many such queries. With this information, you can improve db design. Here’s what some solutions might look like:

- The columns in the table that are being updated are rarely read. Split out the updated column to its own table.

- The table being updated is being read often in the same sproc. Maybe it’s possible to update and read in the same statement.

- The table is very large and accessed frequently. Partition the table avoiding contention.

- Refactor the application to access/update the table differently

Good luck