The beginning of the school year is behind us and what a semester start! 2020 has been tough on many of us and I’m fortunate to work for a company whose services are in such high demand. In fact we’ve seen some scaling challenges like we’ve never seen before. I want to talk about some of them.

- Maximum Simultaneous User Connections

- In Memory OLTP Defeated Our Tempdb Problems

- Detect Excessive Spinlock Contention on SQL Server

At D2L, we’re the perfect candidate customer for In Memory OLTP features, but we’ve held off adopting those features for years. Our servers handle tons of super quick but super frequent queries and so we find ourselves trying to address the same scaling challenges we read about in Microsoft’s customer case studies.

But there’s only one In Memory feature in particular that I care about. It’s the Memory Optimized Table Types. Specifically, I’ve always wanted to use that feature to avoid tempdb object allocation contention. Recently I finally got my chance with a lot of success. So even though I could say I’m happy with In Memory features, I think it’s more accurate to say that I feel relieved at having finally squashed my tempdb issues.

The Trouble With Tempdb

We use table valued parameters with our procedures a lot (like thousands a second). We’re lucky that the table variables are not created on each execution, they’re cached. We rely heavily on the reduced overhead that this gives us. It’s for that reason we much prefer table variables over temp tables.

But when we crank up the demand, we can still run into catastrophic trouble. When tempdb contention hits us, throughput doesn’t just plateau, it drops hard. This kind of contention we see is like a kind of traffic jam where anyone who needs to use tempdb (i.e. everyone) has to wait for it. These tempdb traffic jams are rough. We even created a lighter version of sp_whoisactive that avoids tempdb issues for times like those.

I won’t go on too long about our troubles (I’ve written about tempdb issues a few times already: 1, 2, 3, 4, 5, 6, 7). The usual advice is to increase the number of tempdb data files. We were using 48 data files and really looking hard for other options.

SQL Server 2019 has some promising options. In TEMPDB – Files and Trace Flags and Updates, Oh My! Pam Lahoud points out how SQL Server can use all the PFS pages in the tempdb data files, not just the first available one. But we couldn’t move to 2019 that quickly. So we looked at Memory Optimized Table Types to help us.

Memory Optimized Table Types Can Help

Improving temp table and table variable performance using memory optimization tells us how. Our main goal is to avoid tempdb contention and memory optimized table variables don’t use tempdb at all. As long as we can be sure that the number of rows stored in these table variables is small, it’s all pros and no cons.

But it wasn’t easy for us to implement. In 2017, I wrote about Postponing Our Use Of In Memory OLTP. There were just some challenges that we couldn’t overcome. We’re not alone in struggling with the limitations of In Memory features. But our challenges weren’t the usual limitations that folks talk about and so they’re worth exploring.

The Challenge of Sardines and Whales

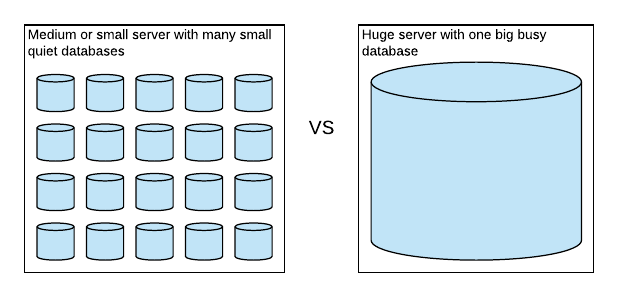

We have one product that we deploy to many clients. Each client gets their own database. The big ones (whales) have their own servers but the small ones (sardines) get grouped together.

So the overhead of enabling In Memory on all the sardines was going to cause issues. The In Memory OLTP filegroup requires up to 4GB of disk space which isn’t easy to handle with hundreds of sardines. So we’re left with this dilemma. We’d like to use In Memory on the biggest whales, but not on the sardines. We tackled that in two ways

- Decide that the choice to add the In Memory filegroup is configuration, not product. This still required some changes though. Our backup and restore processes needed to at least handle the new filegroups, but they couldn’t expect it.

- Add an exception to our processes that allow schema drift in the definition table types. Our plan was to manually alter the table types to be memory optimized, but only on the largest whales. Introducing schema drift is not ideal, but we made this choice deliberately.

This whole challenge could have been avoided if memory optimized table types didn’t require an In Memory OLTP file group. I get the sense that the memory optimized table types don’t actually use that folder because I noticed something interesting. SQL Server 2019 introduces memory optimized tempdb metadata tables without a memory optimized filegroup! How did they pull that off? I’m a bit jealous. I asked Pam Lahoud and it turns out that the In Memory filegroups are still required for memory optimized table types and will continue to be. It turns out that Microsoft can make certain assumptions about the tempdb metadata tables that they can’t with regular table types. 😟

Some Implementation Surprises

As we implemented our plan, we also encountered some interesting things during testing that might be useful for you if you’re considering In Memory features.

- The default directory for storing database files should point to a folder that exists on the database server and on any secondary nodes in the same availability group. So if the default location is

E:\SQLDatathen make sure there’s an E drive on every node. SQL Server will need to create anxtpfolder in there. - When adding the In Memory OLTP file group, the folder that contains it should also exist on all secondary nodes.

- In SQL Server 2014, I noticed that the addition of the memory optimized file group required up to 4 Gb of space. In SQL Server 2016, I see that that still happens, but the space isn’t taken until the first memory optimized table type I create. That’s also when the

xtpfolder gets created. - Adjusting the table types to be memory optimized was a challenge because we wanted the process to be online. I wrote about how we pulled that off earlier this week in How to Alter User Defined Table Types (Mostly) Online

Success!

Things worked out really really well for us. Our main goal was to avoid tempdb contention and we succeeded there. But there’s an additional performance boost. When you insert into a regular table variable, that data gets written to tempdb’s transaction log. But that’s not the case for memory optimized tables. So even though I really just care about avoiding contention, the boost in performance is significant and measurable and really nice.

In testing we were finally able to push a 96 CPU machine up to 100% CPU on every core and only then did throughput plateau. No tempdb contention in sight.

In production we also saw the same behavior and we were able to sustain over 200K batch requests per second. No tempdb contention in sight.

Those numbers are nice, tempdb contention has been such a thorn in my side for so long, it’s such a relief to squash that issue once and for all. I now get to focus on the next bottleneck and can leave tempdb contention in the past.

That’s great! We’ve also been battling tempdb contention for a long time now, but haven’t gone the in-memory route yet (there’s so much code to change!). I wonder, have you tried throwing hardware at it? As in putting tempdb of NVMe drives.

Comment by Alex Friedman — October 25, 2020 @ 9:24 am

Hey Alex,

Tempdb contention isn’t a hardware issue, it’s contention that happens in memory and so faster IO doesn’t help here. The contention is fighting over latches on PFS pages and so adding extra data files in tempdb just adds more PFS pages to be fought over and so less contention. Add more data files to tempdb first. That helps in the majority of cases.

Also, we didn’t need to change that much code. There’s two things that need to change. The definition of the table types. And the code that uses the table types. We didn’t have to change any code that uses the table types because we altered the table types without changing the name (see the link above about altering online)

And we actually didn’t alter all the table types. We looked at the tables types that were used the most and altered the top 8. (Look at the first comment on this link to see which procedures use tvps the most frequently https://michaeljswart.com/2015/08/avoid-frequent-use-of-tvps-with-wide-rows/)

Comment by Michael J. Swart — October 25, 2020 @ 9:52 am

You’re right that latches are in memory, but with a very large tempdb with many dozens of files, these many PFS pages need to be read into memory first too, right? Not 100% if they all stay in memory due to LRU after the initial warmup. But more importantly, I think that overall faster tempdb activity (due to faster disks) will lead to a less crowded tempdb, which reduces the contention over the metadata too.

About the code changes, yes, when it’s just the table type definition that’s great — and a very neat trick with the switch! However, when you have code using good old temp tables (which are normally better than table variables as we know), that does need changing a metric ton of code 🙂

Comment by Alex Friedman — October 26, 2020 @ 6:16 am

Hi Alex,

The trouble I was having is object allocation contention. Latch contention on the PFS pages. And fast disks certainly do not help.

It sounds like you’re encountering a different kind of contention (tempdb metadata contention) which is not what this article is about.

Pam Lahoud has an article describing improvements to that in Sql Server 2019. A link to that is here https://techcommunity.microsoft.com/t5/sql-server/tempdb-files-and-trace-flags-and-updates-oh-my/ba-p/385937

Comment by Michael J. Swart — October 26, 2020 @ 8:09 am

[…] Michael J. Swart uses In-Memory OLTP: […]

Pingback by Memory-Optimized Table Types to Avoid tempdb Contention – Curated SQL — October 26, 2020 @ 8:00 am

Thanks Michael for a very inspirational article. We are considering using In memory TVPs as well as table variables.Even if It is not the point of this article, but I am particularly interested in how all this affects memory consumption. After the execution of the procedure with In memory TVPs and the table variables, what happens to those objects in memory? Do they still remain in memory but without data? And will the existing empty objects be reused in the next procedure call, or will new ones be created? What should be the expectation of memory consumption if the same procedure is called frequently and concurrently, whereby both TVPs and table variables in that procedure are objects of max 1 MB size for instance?

Comment by Vladimir Djordjevic — July 3, 2021 @ 10:31 am