How to deploy schema changes without scheduled downtime

There’s increasing pressure to keep software services available all the time. But there’s also pressure to deploy improvements frequently. How many of us would love to reduce the duration of migration windows or better yet eliminate them entirely. It’s always been challenging to make changes safely without interrupting availability, especially database schema changes.

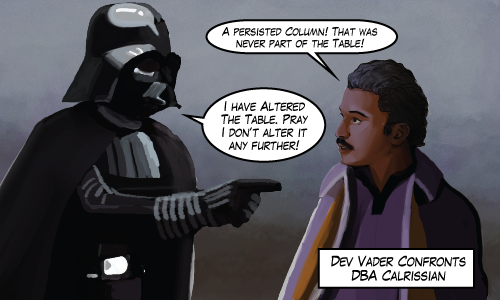

And when responsibilities are split between different groups – DBAs responsible for availability, developers responsible for delivering improvements – it causes some tension:

So I’m beginning a series describing the “Blue Green” continuous delivery technique. It’s a technique that really works well where I work, It helps us manage thousands of databases on hundreds of servers with monthly software updates and zero downtime.

Outline

Blue-Green

We use the Blue-Green deployment techniques described in Continuous Delivery by Humble and Farley and made popular by Martin Fowler in Blue Green Deployment. I’ll describe what the Blue-Green deployment technique is and how we use it.

We actually don’t follow the book perfectly. I’ll describe what we do differently, and why.

OLTP-Friendly

With some effort and creativity, we can break our database migrations into small chunks and deploy them while the application is still live.

Many changes to schema will lock tables for longer than we can tolerate. Often, the schema changes will need to take a brief SCH-M lock on certain objects and so this technique works best with OLTP workloads (workloads that don’t send many long-running queries).

I explore ways to make schema changes that run concurrently with an OLTP workload. What kinds of changes are easy to deploy concurrently and what kind of changes are enemies of concurrency?

Co-ordination is Key

This series is meant to help people investing in automation and other process improvements. It takes careful co-ordination between those responsible for uptime and those responsible for delivering improvements. So in your organization, if Dev Vader and DBA Calrissian are on the same team (or even the same person) then this series is especially for you.

I know this topic really well. It should be fun.

Coming Next: Blue-Green Deployment

Ooo! Looking forward to this.

Comment by Brent Ozar — January 5, 2018 @ 10:18 am

That sounds great. I’m in the middle of preparing a talk now on (near-) zero downtime database deployments, so it’ll be interesting to compare notes.

Comment by Kevin Feasel — January 8, 2018 @ 9:50 am

Hi Michael,

Found this looking at the SQL Saturday scheduled for Victoria BC. Sadly I live in Winnipeg 🙂

Zero downtime schema changes is on our road map for 2018.

Thank you very much for sharing.

Brian

Comment by Brian Peasey — January 25, 2018 @ 3:28 pm

That’s awesome Brian, I’d love to hear how you guys do. For us, it was a big project.

Good luck!

Comment by Michael J. Swart — January 25, 2018 @ 3:31 pm

Hi Michael – Nice series.

I do have environment with P2P replication setup for the zero downtime deployment (Active-Passive). It’s very complicated, however it allows us to make schema changes that results in blocking/downtime at the database such as (varchar(100) to nvarchar(400)).

How can we handle these changes with blue-aqua-green deployment.

Thanks,

Ahmad

Comment by Ahmad Osama — April 1, 2019 @ 10:44 pm

Hi Ahmad,

You’re right. Switching from varchar to nvarchar (unicode to non-unicode) will process all the data in the table.

https://git.dev.d2l/projects/CORE/repos/lp/pull-requests/12913

In this series, I have a post that gives an example.

In my example,

What you’re asking is actually the thing that I’m trying to explain in this series and I realize there’s a lot to understand.

Let me know if you find something in particular that’s not clear.

Comment by Michael J. Swart — April 3, 2019 @ 8:40 am

I understand. However, if the existing column is part of a unique index – In this case, it would be one more step to modify the index to include the new column with drop_existing and online on. However, wouldn’t affect the performance of the queries against the table being modified?

Thanks,

Ahmad

Comment by Ahmad — April 8, 2019 @ 9:01 am

Hi Ahmad,

It really depends. It could affect performance if it consumes resources (like IO or CPU) that starve other processes that want to fight for those resources.

And in other scenarios, it could actually help! By processing a table, the table’s data is cached in memory for a bit and doesn’t have to be fetched from disk.

And then again maybe you’re worried about blocking. That’s what the online=on is meant to help with, but it’s a big topic.

There’s no way for me to know what situation you’re in, you’ll have to do testing on your own in order to gain confidence.

Comment by Michael J. Swart — April 8, 2019 @ 9:12 am

Thanks for the help, I don’t have any situation, I was just brainstorming the possible exceptions for this approach and thought of clarifying it with you.

Comment by Ahmad — April 8, 2019 @ 10:55 pm

[…] Read this series from Michael J. Swart. […]

Pingback by Office Hours Speed Round, Text Edition - Brent Ozar Unlimited® — August 10, 2022 @ 9:17 am

[…] Read this. […]

Pingback by Office Hours, Short Text Answers Edition - Brent Ozar Unlimited® — September 5, 2022 @ 9:17 am