It is surprisingly difficult to delete data permanently. SQL Server has sophisticated features that prevent all kinds of data loss, but the opposite is not true. SQL Server has very few features that help you destroy data.

It is surprisingly difficult to delete data permanently. SQL Server has sophisticated features that prevent all kinds of data loss, but the opposite is not true. SQL Server has very few features that help you destroy data.

But why would anyone need to destroy data? Lots of reasons.

- Some laws and data retention policies require that data older than a certain age gets destroyed.

- Developers often ask for production data, or a subset. A DBA could decide to give them a backup having first dropped certain tables or columns that contain personally identifiable information.

There are countless other reasons why you might want to destroy data and I’m going to focus on three general use cases: destroying tables, destroying columns and destroying rows.

Straight Up Deleting Is Not Enough

I’m going to demonstrate that deleting data is not equivalent to destroying data. Deleting data is just hiding it.

First create a table, populate it and see what it looks like on disk:

CREATE DATABASE DeletedDataDemo; GO USE DeletedDataDemo; SELECT col1, col2, col3 INTO dbo.Table1 FROM ( VALUES (1, 'aaaaaaaaaaaa', 'bbbbbbbbbbbb'), (2, 'cccccccccccc', 'dddddddddddd'), (3, 'eeeeeeeeeeee', 'ffffffffffff') ) data (col1, col2, col3) DBCC TRACEON (3604); DBCC IND(DeletedDataDemo, 'dbo.Table1', 1) -- file 1, page 216 for example --update the following command to look at that page DBCC PAGE (DeletedDataDemo, 1, 216, 2); |

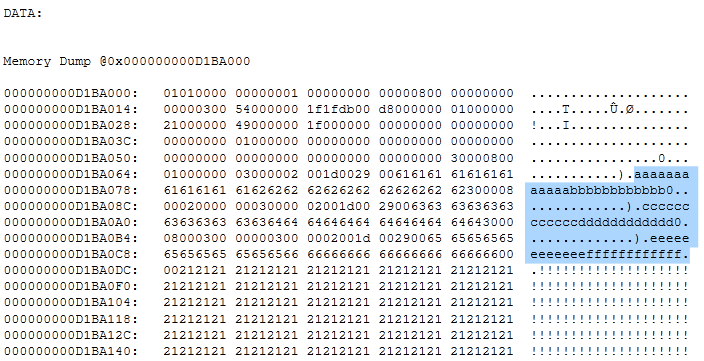

Notice that the last command DBCC PAGE shows the contents of the table as it appears on disk.

That data is still there

And now drop the table that was just created:

DROP TABLE dbo.Table1; -- or -- ALTER TABLE dbo.Table1 DROP COLUMN col3; -- or -- DELETE dbo.Table1 WHERE col1 IN (2,3); DBCC PAGE (DeletedDataDemo, 1, 216, 2); |

Run the DBCC PAGE command and notice that the data values are still there! That data cannot be queried but it remains on disk. Even though this data is in deallocated space, this data can survive backups and restores (even after checkpoints).

Repeat the demo from the beginning. But this time, instead of dropping the table, try deleting rows or dropping a column. Just like the dropped table the data remains on disk.

Try to Destroy Data by Cleaning Pages

So SQL Server doesn’t actually delete data, it hides it and deallocates the space. A ghost cleanup job can eventually clean up this data but you can’t depend on how frequently it runs. That leads to stackoverflow questions like this one: How can I securely destroy some data using sql server 2008?

One of the answers there mentions that sp_clean_db_free_space can force the cleanup to run early. Cleaning pages is exactly what’s required to get rid of that data in deallocated space. But cleaning pages consumes a lot of I/O and maybe that’s why SQL Server 2008 introduced a lot of control over the granularity of cleaning pages:

- Use

sp_clean_db_free_spacefor a whole database. - Use

sp_clean_db_file_free_spacefor a single data file. - Use

DBCC CLEANPAGE(@dbid, @fileid, @pageid)after aCHECKPOINTfor a single page.

These commands force SQL Server to write zeros to any deallocated space.

Mostly…

Dropped-Column Data Sticks Around

Columns are a special case. SQL Server doesn’t reclaim the space that is freed by dropped columns. And this dropped column data is not touched by any of the page cleaning methods. In order to destroy this data, it’s necessary to add a few manual steps. These steps can destroy column data by either overwriting column values or by rebuilding its table’s indexes.

How To Destroy Data

Here’s a table that shows the syntax to use when trying to destroy data.

| When destroying … | Use this syntax | |

|---|---|---|

| Tables |

|

|

| Rows |

|

|

| Columns (except columns in heaps) |

|

|

| Columns (alternative syntax) |

|

|

| Other data structures (Columnstore, Full-text, XML indexes, Service Broker Queues etc…) |

Not evaluated. I don’t know how long this data sticks around. |

The Lessons

Microsoft warns you that these data cleaning procedures are very I/O intensive so I would be reluctant to suggest these methods when alternatives exist.

The clumsiness of the dropped column scenario makes me wonder about other scenarios where SQL Server deletes data but doesn’t destroy it.

And this leads directly to this bit of advice:

Don’t distribute backups of databases that have contained sensitive information.

This is an excellent post. I had never thought of the data that gets left behind on the de-allocated pages, and I also was not aware of the sp_clean_db_free_space proc. I can see this only being necessary in a very small number of shops having high enough security needs to warrant the immediate destruction of data, but it is great to know the option is out there.

Comment by JeremyH — May 24, 2015 @ 1:04 pm

Thanks so much Jeremy, I appreciate the feedback.

I actually wrote this post because I came across a scenario that involved the need to securely delete data. I was surprised by the need actually. It involved providing database backups to an external party and those databases used to contain more than just the client’s data before we deleted it.

But yeah, I’m hoping this post becomes a decent resource for those tackling this kind of use case.

Comment by Michael J. Swart — May 24, 2015 @ 4:34 pm

Great post! Thank-you for taking the time out, evaluating the options and sharing them with the community. I will surely keep this in my knowledge base for any purging solutions that we develop going forward.

One of the options that I have heard about (I have not seen it in implementation – it was just part of a conversation I was having) is actually updating the data with zeroes or x’es (depending upon the data-type) and then deleting it. The line of thought presented to me was – If we need to take the I/O hit in any case, why not ensure that we know what’s remaining behind?

Comment by Nakul Vachhrajani — May 25, 2015 @ 4:54 am

Thanks Nakul,

You have to be very careful about the method you mentioned. Sometimes updating values isn’t good enough. Sometimes updating data values can cause data to move to another page. This happens because the sort order of an index depends on the changed column or maybe a page split occurs. When this happens, the update really isn’t in-place, it’s more of a delete from one place and insert into another. And when that happens, the data stays behind.

Here’s a reproduction

Comment by Michael J. Swart — May 25, 2015 @ 11:37 am

Data cannot ever be truly destroyed. Data has no beginning, and no end. Well, except in a Black Hole, which leads to the Black Hole Information Paradox: http://math.ucr.edu/home/baez/physics/Relativity/BlackHoles/info_loss.html

So, don’t blame SQL Server for something that seems to be a universal problem.

Comment by Thomas LaRock — May 26, 2015 @ 1:52 pm

True enough, but something tells me it would be smart not to ditch our disaster recovery solutions just yet. 🙂

Comment by Michael J. Swart — May 26, 2015 @ 2:08 pm

[…] It’s Hard To Destroy Data – Michael J. Swart (Blog|Twitter) […]

Pingback by (SFTW) SQL Server Links 05/06/15 - John Sansom — June 5, 2015 @ 3:01 am

[…] J. Swart’s excellent post, It’s Hard to Destroy Data, provided the impetus for me to investigate the effectiveness of the data masking used by one of my […]

Pingback by Data Masking is not enough to protect personal information from prying eyes! - SQL Server Science — January 16, 2019 @ 8:43 am