SERIES: Modifying Tables Online

In the next few days I want to describe a way to modify the definition of very large tables while keeping the table available for other queries. Some table changes are already online operations. But offline changes need extra work to keep the table available. I explore one way to do that in this series.

This article used to be a single blog post, but I broke it into four parts because it seemed too long. Read Part 1, and understand the strategy I’m using. Treat Parts 2-4 as appendixes. When you want to use this plan (or one like it), come back and use them as examples. You won’t be able to make use of the examples by cutting and pasting directly (unless you happen to be running an bike equipment store called Adventureworks), but modifications shouldn’t be too hard.

- Part 1: Migration Strategy

- Part 2: Implementation Example

- Part 3: Same Example With Error Handling

- Part 4: Testing

- Part 5: Just One More Thing

Database Schema Changes

Database schema changes are inevitable. Some of these changes can be performed quickly (like altering ntext columns to nvarchar columns). Changes like these don’t have to process every single row in the table and we say that these quick changes are online.

Of course that means there are also such things as offline changes. These are changes that need to lock the table and process each row (like adding a persisted computed column to a table). Offline changes are sometimes okay when:

- You have scheduled maintenance window to do work

- The table isn’t that large or

- the estimated amount of downtime is tolerable

But sometimes the estimated downtime is not tolerable and you want your changes to be online. Microsoft has improved and continue to improve SQL Server’s online features. For example:

- Since 2005, we’ve had online index rebuilds (and even that has improved in SQL Server 2012)

- Remus Rusanu talks about adding non-nullable columns as an online operation.

There are a few operations that are still not online. Here’s an example of one of them:

ALTER TABLE [Tablename] ADD Rowversion [Rowversion] NOT NULL |

The downtime for this table change can last seconds or minutes depending on the size of the table. If your business can’t tolerate that downtime, what options are there? I’m going to explain one strategy which helps me with these following goals:

- I want to add a rowversion column to a table. (But the strategy applies to many other offline changes).

- The straightforward alter statement would lock the table for longer than we can tolerate

- My change can place an exclusive lock on the table, but only for a short amount of time. This means that the change should work concurrently with quick transactions, but not necessarily with large bulk transactions.

- It would be an advantage if this were a database-only solution because while I might understand the applications and processes that query my database, I don’t necessarily have as much control over when or how these queries are sent.

So here’s something that might work:

The Strategy

The strategy is fairly straightforward:

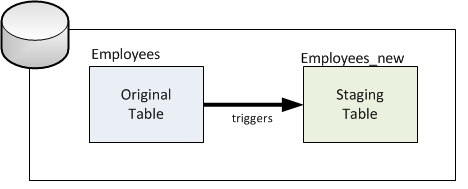

A) I create a staging table which will store the same data as the original table but with the new rowversion column. The staging table is empty at first:

B) Then I add triggers to the original table to keep the staging table up to date. Any changes in the original table will also be applied to matching data in the staging table.

C) Fill the staging table a little at a time. This processing may take time, but the original table is still available to others.

D) Finally remove triggers and rename the tables:

Some Notes

Dependencies I didn’t mention it yet, but we also have to worry about table dependencies. Tables can have a lot of dependencies (triggers, constraints, indexes etc…). You can create dependencies for the staging table, and rename them as well during the switch.

Step D, the Switch: The last step is the tricky one. It’s the one that takes a schema modification (Sch-M) lock on the tables. A Sch-M lock on the table is incompatible with every other kind of lock. So that means that no in-flight transactions are allowed to use the table during the switch. SQL Server handles it pretty well, but if there are long running queries on this table, there will be blocking.

What’s next?

- In part 2, I implement this strategy for Adventureworks’ Sales.SalesOrderHeader table which seems to demonstrate every kind of table dependency SQL Server allows.

- In part 3, I throw in error handling code to make the script more robust. So that if things go wrong, the application doesn’t notice and I don’t lose data.

- In part 4, I explain the testing I do. It gives me more confidence that I don’t miss an update or a delete somehow.

Thanks

I posted a question on dba.stackexchange.com to look for some strategies. The answer I got there (thanks Brent!) is slightly different than the solution I’m describing here. Brent described a solution which used a view that UNION’s the staging table and the original table. It uses less space than the solution I describe, but it’s got some extra gotchas.

Some of this work was done in the course of my regular job. So thanks to Desire2Learn for letting me share some of the lessons I learned there. By the way, we’re hiring.

Really looking forward to the full series and trying this out with an upcoming migration I have. It seems like your like to desire2learn.com does not work either in a browser (I am using IE 10) or Google Reader. Thanks.

Comment by Scott MacLellan — April 16, 2012 @ 9:35 pm

Thanks Scott, The link is fixed.

Comment by Michael J. Swart — April 16, 2012 @ 9:45 pm

[…] Part 1: Migration Strategy […]

Pingback by Modifying Tables Online - Part 2: Implementation Example | Michael J. Swart — April 17, 2012 @ 12:01 pm

Great article! I’ve been using change tracking in SQL Server 2008 to avoid the use of triggers. Very similar to what you describe here. http://www.sqlservercentral.com/articles/Change+Tracking/74397/

Comment by Luke Campbell — April 24, 2012 @ 10:22 am

[…] Modifying Tables Online – Part 1: Migration Strategy – A high quality walk through from Michael J. Swart (Blog|Twitter). How would you solve this task in your environment? I’m loving the artwork in this post too, it really captures/communicates that tense moment all DBAs go through. […]

Pingback by Something for the Weekend – SQL Server Links 20/04/12 - John Sansom SQL Server DBA — April 27, 2012 @ 6:02 am

[…] But it turns out there was a way; it just took a lot of effort (I blogged about that effort in this series). It’s the yellow shirt’s job to explain the “how” and “what” […]

Pingback by Lessons From Geordi La Forge | Michael J. Swart — May 14, 2012 @ 11:56 am

How do you handle duplicates / changes in the batch copy @C, the triggers in @B could have added an insert before your batch gets to it ?

Upserts?

Comment by Theo Ekelmans — June 10, 2016 @ 8:23 am

Hi Theo,

I use a MERGE statement to do that. When the batch gets to a row that’s already been inserted. It doesn’t need to insert.

There’s an example in part 2.

Comment by Michael J. Swart — June 13, 2016 @ 8:48 am

Great article. Love the Indiana meme – sums it up perfectly!!!

Comment by Chris Walsh — June 13, 2016 @ 4:48 am

[…] ran across a post from Michael J Swart that looked at ways to alter a large table while keeping the data available for querying. […]

Pingback by Making Complex Table Changes | Voice of the DBA — August 8, 2016 @ 10:16 am