Takeaway: I like to develop database stuff using rules of thumb. When two rules of thumb are at odds, what should I do? This post, I explore the advice: It depends with an example.

I first give the two rules of thumb:

Rule Of Thumb #1: Avoid Triggers

I don’t like triggers too much and I avoid them whenever possible. It’s just that they

- hide so much that might go wrong,

- They’re a huge source of frustration when debugging problem queries

- They’re often a symptom of sloppy db design and

- it’s a common mistake to write them using the assumption that the inserted virtual table contains a single row

Rule Of Thumb #2: Enforce Business Rules In The Database

It’s good to enforce business rules at the database:

- Databases often outlive the applications that are built against it

- It’s too difficult to enforce the rules in a single place only (e.g. the application). The effort needed to keep the data clean becomes unsustainable.

- They can help development. It shouldn’t need saying that if foreign keys throw errors during development, don’t get rid of them! They’re doing their job!

The Example: No Overlapping Intervals

This is where the two rules of thumb collide! It’s because not all business rules are easy to implement. Take this example, the case of preventing overlapping intervals. It’s a common scenario. Some examples are seen

- when scheduling resources (planes at a gate, weddings at a hall, meetings in rooms)

- when enforcing one thing at a time (terms of a particular office, factory equipment status)

To restrict overlapping intervals, the usual business rule enforcement tricks don’t work:

- They cannot be enforced easily with database table design (

foreign keys don’t apply because the constraint applies to a single tableSee Comments). - They can’t be enforced easily with checks because a check constraint only enforces rules on values in a single record. But the overlapping interval restriction is a restriction based on two records.

- And unique indexes can’t help either.

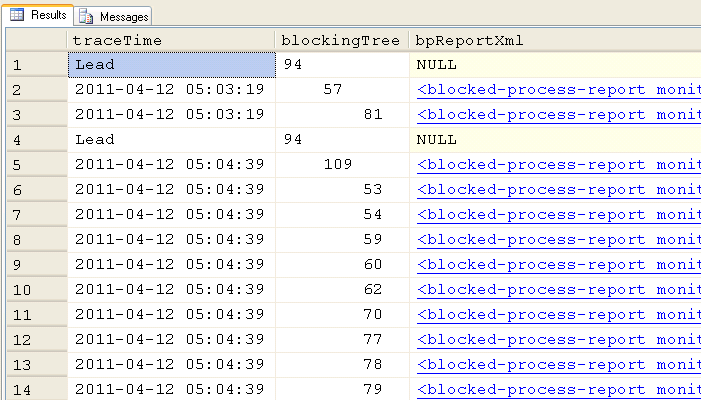

But the constraint we want to enforce could be implemented decently with a trigger. Consider this table that tracks what I call shifts:

USE tempdb GO CREATE TABLE SHIFTS ( shiftId int identity primary key, beginTime datetime2 not null, endTime datetime2 not null, check (beginTime < endTime ) ) CREATE UNIQUE INDEX IX_SHIFTS ON SHIFTS(beginTime) INCLUDE(endTime) GO |

We’ve already enforced that shifts go forward in time with a check and that no two shifts will start at the same time with a unique index. Here’s a trigger which will enforce that no two shifts overlap:

CREATE TRIGGER t_NoOverlappingShifts ON SHIFTS AFTER UPDATE, INSERT AS IF EXISTS ( --next shift starts after this one ends SELECT 1 FROM inserted CROSS APPLY ( SELECT TOP 1 beginTime FROM SHIFTS WHERE beginTime > inserted.beginTime ORDER BY beginTime ASC ) AS NextShift(beginTime) WHERE NextShift.beginTime < inserted.endTime ) BEGIN RAISERROR ('Error: Shifts cannot overlap.', 16, 1) ROLLBACK TRANSACTION END IF EXISTS ( --previous shift ends before this one starts SELECT 1 FROM inserted CROSS APPLY ( SELECT TOP 1 endTime FROM SHIFTS WHERE beginTime < inserted.beginTime ORDER BY beginTime DESC ) AS PrevShift(endTime) WHERE PrevShift.endTime > inserted.beginTime ) BEGIN RAISERROR ('Error: Shifts cannot overlap.', 16, 2) ROLLBACK TRANSACTION END GO |

The trigger performs decently, and it does its job, but it’s not suited for every situation (e.g. bulk load operations)

My Opinion

So I ask myself the question: Is it good to create the trigger in this case?. I’m going to say that this trigger is good and that the value of enforcing business rules here is better than avoiding triggers because

- the trigger doesn’t have any “side effects”. What I mean is that besides sometimes failing, the data in the database won’t be different than if the trigger didn’t exist

- the trigger handles inserts or updates of multiple rows correctly

- this trigger is not a bandaid for sloppy database design (like those maintenance triggers that keep redundant data in synch)

But I’d like to hear what you think in the comments.

P.S. It occurs to me that maybe you don’t even have the same rules of thumb that I mentioned. So what do you think? triggers: good or bad?