About eight years ago, Dan Guzman wrote a post called Use Caution with Explicit Transactions in Stored Procedures. In it, he talks about error handling and transactions, specifically with respect to the XACT_ABORT setting.

XACT_ABORT

Microsoft’s docs for XACT_ABORT are pretty clear. The setting determines whether “SQL Server automatically rolls back the current transaction when a statement raises an error”.

And in nearly every scenario I can think of that uses a transaction, this automatic rollback is the desired behavior. The problem is that it’s not the default behavior. And this leads to Dan Guzman’s advice where he strongly recommends that SET XACT_ABORT ON be included “in all stored procedures with explicit transactions unless you have a specific reason to do otherwise.”

What Could Go Wrong?

When a statement inside a transaction fails (for whatever reason) and XACT_ABORT is set to off, then…

- That transaction is abandoned.

- Any locks taken during that transaction are still held.

- Even if you close the connection from the application, .NET’s connection pooling will keep that connection alive and the transaction on SQL Server stays open.

- Fortunately, if someone reuses the same database connection from the connection pool, the old transaction will be rolled back.

- Unfortunately developers can’t count on that happening immediately.

- Abandoned transactions can cause excessive blocking leading to a concurrency traffic jam.

- Also, abandoned transactions can interfere with downstream solutions. Specifically ones that depend on the transaction log. Transaction logs can grow indefinitely. Replication solutions can suffer. If RCSI is enabled, the version store can get out of hand.

Some (or all) of those things happened to us last week.

Steps To Take

Here are some things you can do:

Do you have abandoned transactions right now?

It’s not too hard to identify these abandoned transactions:

-- do you have abandoned transactions? select p.spid, s.text as last_sql from sys.sysprocesses p cross apply sys.dm_exec_sql_text(p.sql_handle) s where p.status = 'sleeping' and p.open_tran > 0 |

Also if you use sp_whoisactive, you can identify these processes as those with a sleeping status and at least one open transaction. But there’s a trick I use to identify these quickly. The sql_text value in the output of sp_whoisactive will typically begin with CREATE PROCEDURE. When I see that, I know it’s time to check whether this connection is sleeping or not.

SET XACT_ABORT ON

Follow Dan Guzman’s advice to include SET XACT_ABORT ON in all stored procedures with explicit transactions.

You can actually find the procedures in your database that need a closer look

-- find procedures that could suffer from abandoned transactions SELECT * FROM sys.procedures where OBJECT_DEFINITION(object_id) like '%BEGIN TRAN%' and OBJECT_DEFINITION(object_id) not like '%XACT_ABORT%' order by name |

Set XACT_ABORT ON server-wide

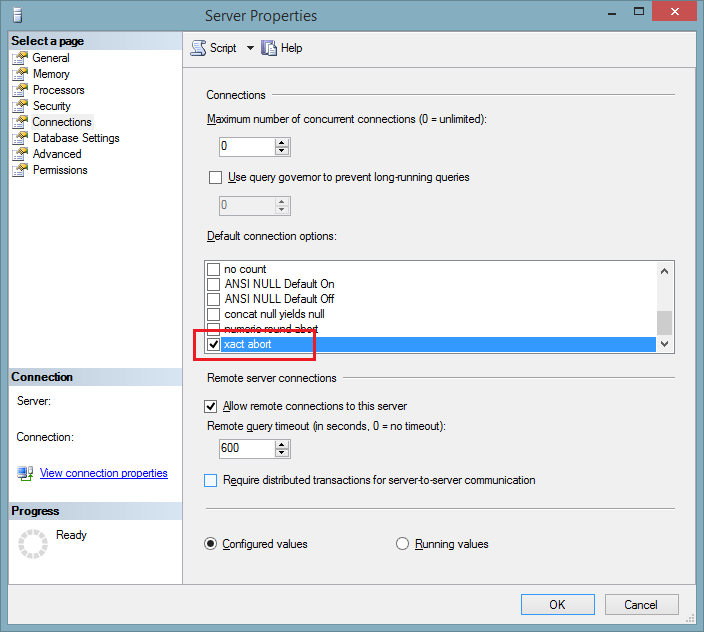

If you choose, you can decide to set the default value for all connections to your server. You can do that using Management Studio:

Or via a script:

-- turn the server's xact_abort default on declare @user_options_value bigint; select @user_options_value = cast(value as bigint) from sys.configurations where name = 'user options'; set @user_options_value = @user_options_value | 0x4000; exec sp_configure N'user options', @user_options_value; RECONFIGURE WITH OVERRIDE; -- (if necessary) turn the server's xact_abort default off declare @user_options_value bigint; select @user_options_value = cast(value as bigint) from sys.configurations where name = 'user options'; set @user_options_value = @user_options_value & 0x3fff; exec sp_configure N'user options', @user_options_value; RECONFIGURE WITH OVERRIDE; |

Code Review

I love code reviews. They’re more than just a tool for improving quality. They’re learning opportunities and teaching opportunities for all involved.

Last week, I invited readers to have a look at a procedure in a post called Code Review This Procedure. I was looking for anyone to suggest turning on XACT_ABORT as a best practice. It’s a best practice where I work, but things like this slip through. We should have caught this not just during testing, but during development. It’s obvious with hindsight. But I wanted to determine how obvious it was without that hindsight. I guess it was pretty subtle, the XACT_ABORT was not mentioned once. That’s either because the setting is not often used by most developers, or because it is easily overlooked.

But here are some other thoughts that readers had:

Concurrency

Many people pointed at concurrency and transaction isolation levels as a problem. It turns out that concurrency is very hard to do right and nearly impossible to verify by inspection. In fact one of my favorite blog posts is about getting concurrency right. It’s called Mythbusting: Concurrent Update/Insert Solutions. The lesson here is just try it.

Cody Konior (blog) submitted my favorite comment. Cody writes “I often can’t disentangle what the actual impact of various isolation levels would be so I go a different route; which is to create a quick and dirty load test”. I can’t determine concurrency solely by inspection either, which is why I never try. Cody determined that after hammering this procedure, it never failed.

He’s entirely right. Concurrency is done correctly here. Ironically, most of the fixes suggested in other people’s code reviews actually introduced concurrency issues like deadlocks or primary key violations.

People also suggested that blocking would become excessive. It turns out that throughput does not suffer either. My testing framework still managed to process 25,000 batches per second on my desktop without error.

Validating inputs

Some people pointed out that if NULL values or other incorrect values were passed in, then a foreign key violation could be thrown. And they suggested that the procedure should validate the inputs. But what then? If there’s a problem, then there are two choices. Choice one, raise no error and exit quietly which is not ideal. Or choice 2, raise a new error which is not a significant improvement over the existing implementation.

Avoiding the transaction altogether

It is possible to rewrite this procedure without using an explicit transaction. Without the explicit transaction, there’s no chance of abandoning it. And no chance of encountering the trouble that goes with abandoned transactions. But it’s still necessary to worry about concurrency. Solutions that use single statements like MERGE or INSERT...WHERE NOT EXISTS still need SERIALIZABLE and UPDLOCK.

Error handling

I think Aaron Mathison (blog) nailed it: I’m just going to quote his review entirely:

Since your EVENT_TICKETS table has required foreign keys (evidenced by NOT NULL on all columns with foreign key references) the proc should be validating that the input parameter values exist in the foreign key tables before trying to insert into EVENT_TICKETS. If it doesn’t find any one of them it should throw an error and gracefully rollback the transaction and return from the proc.

The way it’s designed currently I think you could get an error on inserting to EVENT_TICKETS that would fail the proc and leave the transaction open.

[…] this week we encountered a web-site outage caused by a database procedure. I’m going to blog about that problem (in detail) in a post scheduled for next week. But before I publish that post, I want to know how subtle or obvious the problem was. It seems […]

Pingback by Code Review This Procedure | Michael J. Swart — October 6, 2015 @ 12:06 pm

In fact SQL Server is unpredictable when it comes to aborting transactions. I never know what kinds of errors will abort the statement or the transaction. Does not seem to be documented.

Is there a way to know? I find it insane that after an error you *don’t know* whether your transaction is alive.

Comment by tobi — October 6, 2015 @ 1:37 pm

You’re right tobi. That is frustrating.

I think Erland Sommarskog made a good attempt http://www.sommarskog.se/error_handling/Part1.html

But in general, you’re right. Error handling has always been (and continues to be) a struggle. Even with (or especially because of) the introduction of TRY CATCH

Comment by Michael J. Swart — October 6, 2015 @ 1:45 pm

My approach for fixing this would be to introduce a BEGIN ATOMIC block. All errors in that block behave like exceptions in any sane language. Exceptions roll back their statement, always. When such an exception escapes the ATOMIC block the block is rolled back as if there was a safepoint right before the block. The exception continues propagating but the side-effect are undone. The transaction *always* continues.

This feature would completely fix the issue. I don’t see any reasons this could not exist.

Comment by tobi — October 6, 2015 @ 2:46 pm

@tobi, That’s a good suggestion. In fact based on an informal poll, most people believe it’s how BEGIN TRANSACTION behaves today.

Comment by Michael J. Swart — October 6, 2015 @ 3:01 pm

Yes, people believe in nested transactions. Which would be awesome to have.

Comment by tobi — October 6, 2015 @ 4:43 pm

What I can’t work out is why ON is not the default.

The main point of a transaction is all-or-nothing, so why make OFF the default?

Comment by Paul — October 6, 2015 @ 7:17 pm

Hi Paul,

I don’t know. My wild guess was that during the dawn of databases, when the only interactions with a database was between a mainframe server and a person at a terminal. When SQL was very interactive, I can imagine an operator doing a lot of work inside a transaction. If then the operator submits a query with a syntax error, then that operator now has a chance to resubmit the query, commit or rollback. And my guess was that this default was maintained through endless backwards compatibility decisions.

I’d like to believe that’s true, but I just made it up because I think it makes a good story. I honestly don’t know.

Comment by Michael J. Swart — October 6, 2015 @ 7:43 pm

I learn a lot in this exercise !

I also have trial to use Cody’s testing script to run my trial version sp, which use single statement INSERT ..SELECT… WHERE NOT EXISTS,

and surprisingly it does not fail even without UPDLOCK hint,

though I just have no confident at all to say that syntax can really achieve the desired “Atomic” behaviour.

(As the time DB engine spend to run the subquery part could be so small, so, it is just also possible that I just not try hard enough)

It leads to one thought in my mind that, for any concurrency issue related testing, it may hardly achieve “ENOUGH” testing,

as that may needs to cover as many combination of state as possible, across any “potential” conflicting programs.

I would be much appreciated if anyone can share their practice on such kind of concurrency issue related testing in their working environment

Comment by Eric — October 7, 2015 @ 3:04 am

Hi Eric,

I have my own test framework that I like to use. I blogged about it here Generating Concurrent Activity.

In my test, I found that your implementation (with or without UPDLOCK) generated primary key violations (sorry).

The difference in your test and my test could be a couple of things.

In my test framework, I included an additional step that gets executed 40% of the time. The extra step delete a random row from EVENT_TICKETS. This causes the s_EVENT_TICKETS_GetOrCreate procedure to insert more often.

The other idea is that perhaps Cody’s powershell script didn’t generate volume fast enough.

But I love that you’re trying this stuff out on your own.

Michael

Comment by Michael J. Swart — October 7, 2015 @ 8:38 am

This is exactly why I always always always set XACT_ABORT instance-wide (and then no need for it in code reviews).

Great posts!

Comment by Alex Friedman — October 8, 2015 @ 11:28 am

Hi Michael,

I follow your suggested link and use the so called Notepad + DOS Method (As I still not yet learn C#) to adapt’s Cody’s testing code there, something like below …

echo off

sqlcmd -S SERVER -E -Q “set nocount on; declare @a INT, @b INT, @c INT, @d INT; declare @i int = 0; begin try while (@i < 1000000) begin SET @a = ROUND(99 * RAND() + 1,0); SET @b = ROUND(99 * RAND() + 1,0); SET @c = ROUND(99 * RAND() + 1,0); SET @d = ROUND(99 * RAND() + 1,0); exec eftest.dbo.s_EVENT_TICKETS_GetOrCreate @a, @b, @c, @d; set @i+= 1; end; end try begin catch select ERROR_NUMBER() AS ErrorNumber, LEFT(ERROR_MESSAGE(), 255) AS ErrorMessage; end catch"

REM exit

With your reminder on adding extra procedure to delete EVENT_TICKETS alongside to allow sustain the testing, I could reproduce the key violation symptoms.

Just an excellent experience in a SQL Server course !

(Not only having lecture notes, but also having marking for the exercise I've try, offered from subject expert, all free of charge. I should owe you a bottle of beer :p )

Comment by Eric — October 9, 2015 @ 3:38 am

Thanks for those kind words Eric.

I’m glad you decided to try this stuff out. I’ve found these techniques very useful and now I’m happy to know that I’ve passed some of those on.

Comment by Michael J. Swart — October 9, 2015 @ 8:33 am

Nice post, glad to see this sort of incident being discussed in a semi-public manner. Are you sure about this part, though? “Fortunately, if another application reuses the same database connection from the connection pool, the old transaction will be rolled back.” While I think I know what you are getting at, if I take this literally I’m not sure how it’s possible for another application to use a connection that it didn’t open.

Comment by James L — October 10, 2015 @ 5:12 pm

Yep, I see how that part can be confusing. Connections look brand new for the next process to use a connection from the connection pool. I can go into more detail after the weekend but until then maybe search for “.net connection pooling” and “sp_reset_connection”.

I do have a repro and I do see these resources being held until the next guy uses the connection.

Comment by Michael J. Swart — October 10, 2015 @ 11:01 pm

If it was pooled, wouldn’t it be re-used by the *same* application, not another application?

Comment by James L — October 11, 2015 @ 5:11 am

You’re right. Good catch. In my case it’s a web server. I’ll update the text from “another application” to “another process” or “another request”.

Comment by Michael J. Swart — October 11, 2015 @ 9:09 am

OK, sorry if I’m nitpicking — mostly I just wanted to make sure I wasn’t misunderstanding something fundamental. I did really enjoy reading the post and the side discussion on connection pooling got me reading this post, which taught me some more new things: http://blogs.msdn.com/b/ialonso/archive/2012/06/04/how-to-determine-whether-a-connection-is-pooled-or-nonpooled.aspx

A few years ago I went looking for reasons not to enable XACT_ABORT as a standard practice and didn’t find any except in cases where I wanted to explicitly define how to handle different errors with TRY .. CATCH. This series of posts and the comments have reinforced that decision so far.

Comment by James L — October 11, 2015 @ 10:24 am

[…] Don’t Abandon Your Transactions – Michael J. Swart (Blog|Twitter) […]

Pingback by (SFTW) SQL Server Links 16/10/15 - John Sansom — October 16, 2015 @ 4:29 am

[…] there a bug in your code that’s causing queries not to […]

Pingback by Implicit Transactions: Why Unrelated Queries Block Each Other In SQL Server – Erik Darling Data — May 18, 2022 @ 10:48 am